零、环境

0.1软件版本

hadoop3.0

java 1.8.241

flink-1.12.3

zeppelin-0.9.0-bin-all

kafka.1.1.1(详见kafka集群部署)

0.2硬件

192.168.0.24 8c32G500SSD hadoop-master

192.168.0.25 8c32G500SSD hadoop-client-1

192.168.0.27 8c32G500SSD hadoop-client-2

0.3 架构方式

workers集群

一、部署初始化

1.0 各个服务器免密

# ssh-keygen -t rsa

# ssh-copy-id node01

# 保证这三个文件每个服务器一致

.ssh/

total 16

-rw-r--r--. 1 root root 400 May 7 18:46 authorized_keys

-rw-------. 1 root root 1675 May 7 18:32 id_rsa

-rw-r--r--. 1 root root 400 May 7 18:32 id_rsa.pub

1.1 java安装

1.2 修改环境变量

cat >> /etc/profile << EOF

export JAVA_HOME=/usr/java/jdk1.8.0_241-amd64/

export FLINK_HOME=/export/servers/flink-1.12.3/

export CLASSPATH=$JAVA_HOME/lib

export HADOOP_HOME=/export/servers/hadoop-3.3.0

export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export ZEPPELIN_HOME=/export/servers/zeppelin-0.9.0-bin-all

export KAFKA_HOME=/export/servers/kafka

export ZK_HOME=/export/servers/zookeeper/

export PATH=$PATH:$JAVA_HOME/bin:$ZK_HOME/bin:

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$FLINK_HOME/bin:$ZEPPELIN_HOME/bin:$ZK_HOME/bin:$KAFKA_HOME/bin

EOF

1.3 检查主机名访问

#cat /etc/localhost

192.168.0.24 hadoop-master

192.168.0.25 hadoop-client-1

192.168.0.27 hadoop-client-2

1.4下载相关软件

java 需手动上传

单独安装:rpm -ivh jdk-8u241-linux-x64.rpm

# hadoop

wget https://downloads.apache.org/hadoop/common/hadoop-3.3.0/hadoop-3.3.0.tar.gz

# flink

wget https://mirror-hk.koddos.net/apache/flink/flink-1.12.3/flink-1.12.3-bin-scala_2.11.tgz

# zeppelin

wget https://mirror-hk.koddos.net/apache/zeppelin/zeppelin-0.9.0/zeppelin-0.9.0-bin-all.tgz

1.5配置时间服务器等

## 安装 yum install -y ntp ## 启动定时任务 crontab -e ## 随后在输入界面键入 */1 * * * * /usr/sbin/ntpdate ntp4.aliyun.com; # nc安装 yum install -y nc # 文件夹规划 mkdir -p /export/servers # 安装目录 mkdir -p /export/softwares # 软件包存放目录 mkdir -p /export/scripts # 启动脚本目录

二、配置hadoop

2.1 解压

tar -xf hadoop-3.3.0.tar.gz -C /export/servers/ tar -xf flink-1.12.3-bin-scala_2.11.tgz -C /export/servers/ tar -xf zeppelin-0.9.0-bin-all.tgz -C /export/servers/ ll /export/servers/

2.2核对环境变量

cat /etc/profile

export JAVA_HOME=/usr/java/jdk1.8.0_241-amd64/

export FLINK_HOME=/export/servers/flink-1.12.3/

export CLASSPATH=$JAVA_HOME/lib

export HADOOP_HOME=/export/servers/hadoop-3.3.0

export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export ZEPPELIN_HOME=/export/servers/zeppelin-0.9.0-bin-all

export KAFKA_HOME=/export/servers/kafka

export ZK_HOME=/export/servers/zookeeper/

export PATH=$PATH:$JAVA_HOME/bin:$ZK_HOME/bin:

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$FLINK_HOME/bin:$ZEPPELIN_HOME/bin:$ZK_HOME/bin:$KAFKA_HOME/bin

2.3配置hadoop

cd /export/servers/hadoop-3.3.0/

vim etc/hadoop/core-site.xml <configuration> <property> <name>fs.default.name</name> <value>hdfs://hadoop-master:8020</value> </property> <property> <name>hadoop.tmp.dir</name> <value>/export/servers/hadoop-3.3.0/hadoopDatas/tempDatas</value> </property> <!-- 缓冲区大小,实际工作中根据服务器性能动态调整 --> <property> <name>io.file.buffer.size</name> <value>4096</value> </property> <!-- 开启hdfs的垃圾桶机制,删除掉的数据可以从垃圾桶中回收,单位分钟 --> <property> <name>fs.trash.interval</name> <value>10080</value> </property> </configuration>

# vim sbin/start-dfs.sh

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

# vim sbin/stop-dfs.sh

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

# vim sbin/start-yarn.sh

YARN_RESOURCEMANAGER_USER=root

HDFS_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

# vim sbin/stop-yarn.sh

YARN_RESOURCEMANAGER_USER=root

HDFS_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

# vim etc/hadoop/hdfs-site.xml

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>node01:50090</value>

</property>

<property>

<name>dfs.namenode.http-address</name>

<value>hadoop-master:50070</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///export/servers/hadoop-3.3.0/hadoopDatas/namenodeDatas,file:///export/servers/hadoop-3.3.0/hadoopDatas/namenodeDatas2</value>

</property>

<!-- 定义dataNode数据存储的节点位置,实际工作中,一般先确定磁盘的挂载目录,然后多个目录用,进行分割 -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///export/servers/hadoop-3.3.0/hadoopDatas/datanodeDatas,file:///export/servers/hadoop-3.3.0/hadoopDatas/datanodeDatas2</value>

</property>

<property>

<name>dfs.namenode.edits.dir</name>

<value>file:///export/servers/hadoop-3.3.0/hadoopDatas/nn/edits</value>

</property>

<property>

<name>dfs.namenode.checkpoint.dir</name>

<value>file:///export/servers/hadoop-3.3.0/hadoopDatas/snn/name</value>

</property>

<property>

<name>dfs.namenode.checkpoint.edits.dir</name>

<value>file:///export/servers/hadoop-3.3.0/hadoopDatas/dfs/snn/edits</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

<property>

<name>dfs.blocksize</name>

<value>134217728</value>

</property>

# vim etc/hadoop/hdfs-site.xml

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop-master:50090</value>

</property>

<property>

<name>dfs.namenode.http-address</name>

<value>hadoop-master:50070</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///export/servers/hadoop-3.3.0/hadoopDatas/namenodeDatas,file:///export/servers/hadoop-3.3.0/hadoopDatas/namenodeDatas2</value>

</property>

<!-- 定义dataNode数据存储的节点位置,实际工作中,一般先确定磁盘的挂载目录,然后多个目录用,进行分割 -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///export/servers/hadoop-3.3.0/hadoopDatas/datanodeDatas,file:///export/servers/hadoop-3.3.0/hadoopDatas/datanodeDatas2</value>

</property>

<property>

<name>dfs.namenode.edits.dir</name>

<value>file:///export/servers/hadoop-3.3.0/hadoopDatas/nn/edits</value>

</property>

<property>

<name>dfs.namenode.checkpoint.dir</name>

<value>file:///export/servers/hadoop-3.3.0/hadoopDatas/snn/name</value>

</property>

<property>

<name>dfs.namenode.checkpoint.edits.dir</name>

<value>file:///export/servers/hadoop-3.3.0/hadoopDatas/dfs/snn/edits</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

<property>

<name>dfs.blocksize</name>

<value>134217728</value>

</property>

# vim etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/java/jdk1.8.0_241-amd64/

export HADOOP_SSH_OPTS="-p 32539"

# vim etc/hadoop/yarn-site.xml

<property>

<name>yarn.resourcemanager.hostname</name>

<value>node01</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>32</value>

<description>该节点上Yarn可使用的CPU个数</description>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-vcores</name>

<value>1</value>

<description>单任务可申请的最小虚拟CPU个数</description>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-vcores</name>

<value>4</value>

<description>单任务可申请的最大虚拟CPU个数</description>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>52000</value>

<description>该节点上Yarn可使用的物理内存</description>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>2048</value>

<description>单任务可申请的最小物理内存</description>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>640000</value>

<description>单任务可申请的最大物理内存</description>

</property>

# vim mapred-site.xml<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

# vim etc/hadoop/workers

hadoop-client-1

hadoop-client-2

# source /etc/profile

2.4启动并验证hadoop

# 主服务器 hadoop namenode -format sbin/start-dfs.sh

sbin/start-yarn.sh

# 主

# jps

13650 Jps

11308 SecondaryNameNode

11645 ResourceManager

11006 NameNode

# worker2

# jps

7561 DataNode

7740 NodeManager

9487 Jps

# jps

24664 Jps

22748 DataNode

22925 NodeManager

2.5配置flink集群和zeppelin

# flink

# vim conf/flink-conf.yaml

jobmanager.rpc.address: 192.168.0.24

jobmanager.rpc.port: 6123

jobmanager.memory.process.size: 14g

taskmanager.memory.process.size: 16g

taskmanager.numberOfTaskSlots: 16

parallelism.default: 2

jobmanager.execution.failover-strategy: region

rest.port: 8081

taskmanager.memory.network.fraction: 0.15

taskmanager.memory.network.min: 128mb

taskmanager.memory.network.max: 2gb

rest.bind-port: 50100-50200

# zeppelin

# cd/export/servers/zeppelin-0.9.0-bin-all

# vim conf/zeppelin-env.sh

export JAVA_HOME=/usr/java/jdk1.8.0_241-amd64/ export USE_HADOOP=true export ZEPPELIN_ADDR=192.168.0.24 export ZEPPELIN_PORT=8082 export ZEPPELIN_LOCAL_IP=192.168.0.24 export ZEPPELIN_JAVA_OPTS="-Dspark.executor.memory=8g -Dspark.cores.max=8" export ZEPPELIN_MEM="-Xms1024m -Xmx4096m -XX:MaxMetaspaceSize=512m" export HADOOP_CONF_DIR=/export/servers/hadoop-3.3.0/etc/hadoop export ZEPPELIN_INTERPRETER_OUTPUT_LIMIT=2500000 # vim conf/shiro.ini admin = password1, admin

2.6启动并验证flink集群和zeppelin

# 启动命令 bin/yarn-session.sh -tm 2048 -s 4 -d bin/zeppelin-daemon.sh start

三、使用yarn模式调试

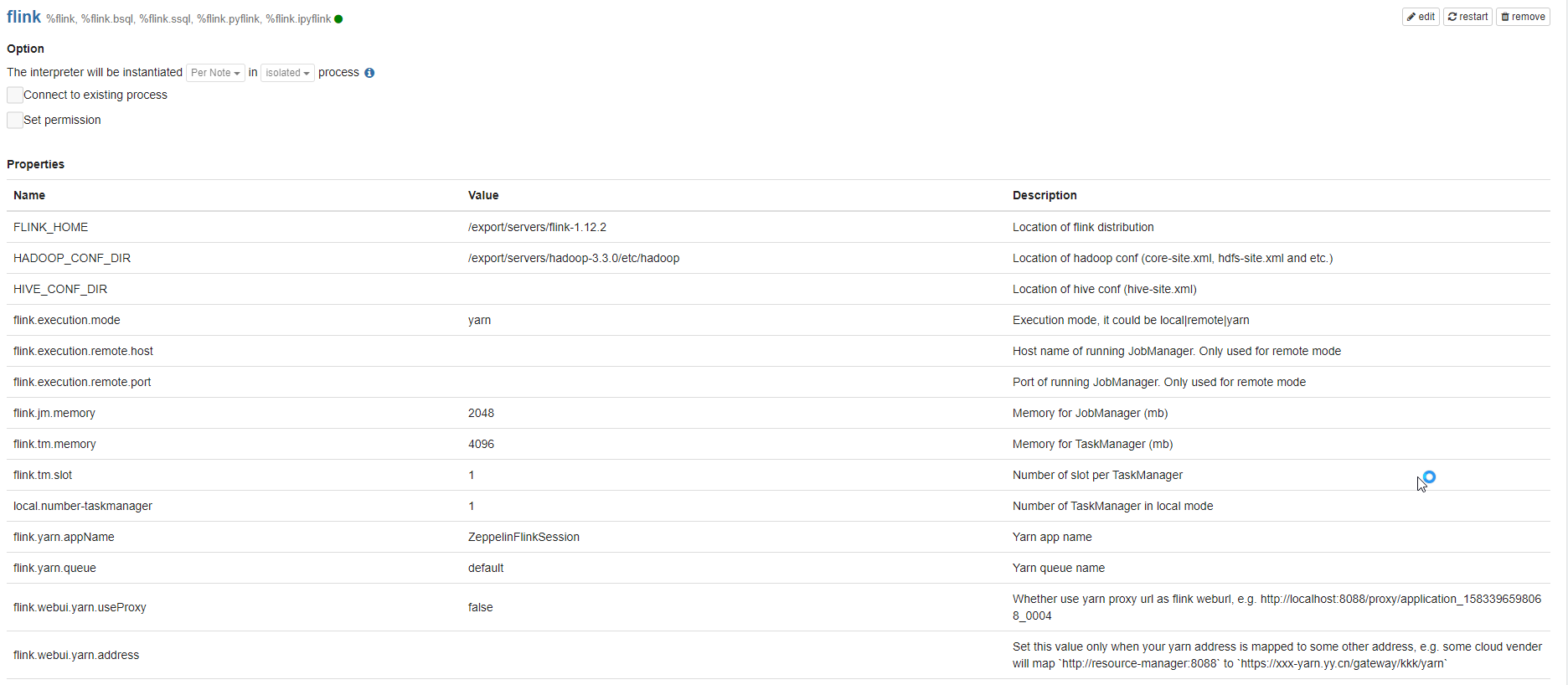

http://<ip>:8082/#/interpreter

flink.conf

%flink.conf flink.execution.mode yarn heartbeat.timeout 180000 flink.execution.packages org.apache.flink:flink-connector-jdbc_2.11:1.12.0,mysql:mysql-connector-java:8.0.16,org.apache.flink:flink-sql-connector-kafka_2.11:1.12.0,org.apache.flink:flink-sql-connector-elasticsearch7_2.12:1.12.1 table.exec.source.cdc-events-duplicate true taskmanager.memory.task.off-heap.size 512MB table.exec.mini-batch.enabled true table.exec.mini-batch.allow-latency 5000 table.exec.mini-batch.size 50000 flink.jm.memory 2048 flink.tm.memory 4096 flink.tm.slot 2

flink.yarn.appName t_apibet_report

书写%flink.ssql

http://<ip>:8081调试

复杂表还是要拆表,拿yarn直接跑

四、实用命令

# jps 24642 Kafka 2818 RemoteInterpreterServer 1988 YarnSessionClusterEntrypoint 24452 YarnTaskExecutorRunner 23688 ZeppelinServer 21448 Jps 10952 NameNode 3530 YarnSessionClusterEntrypoint 20365 RemoteInterpreterServer 18961 NodeManager 11155 DataNode 28247 RemoteInterpreterServer 3864 YarnTaskExecutorRunner 32155 RemoteInterpreterServer 30491 RemoteInterpreterServer 13404 YarnTaskExecutorRunner 15964 YarnTaskExecutorRunner 21084 YarnSessionClusterEntrypoint 414 YarnSessionClusterEntrypoint 18783 ResourceManager 24040 QuorumPeerMain 26152 YarnTaskExecutorRunner 29160 YarnSessionClusterEntrypoint 14825 YarnTaskExecutorRunner 25322 CanalLauncher 24746 RemoteInterpreterServer 31214 YarnSessionClusterEntrypoint 25839 YarnSessionClusterEntrypoint 25136 CanalAdminApplication 1267 RemoteInterpreterServer 31604 YarnTaskExecutorRunner 11515 SecondaryNameNode # yarn app -list # yarn app -kill <Application-Id>