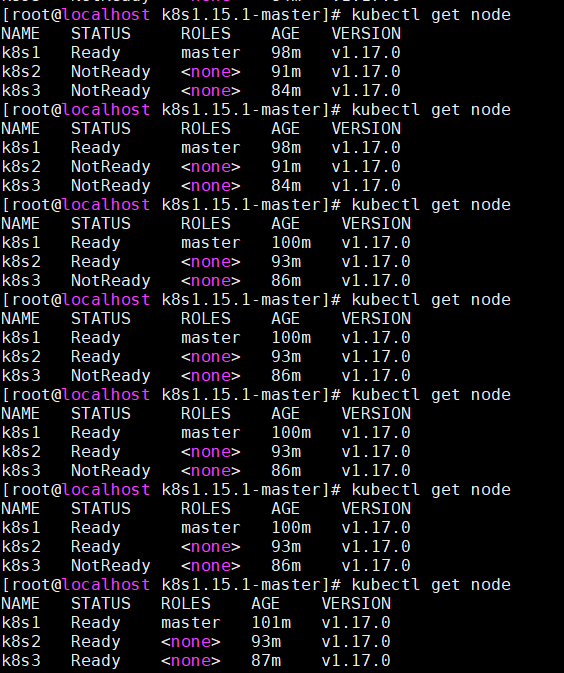

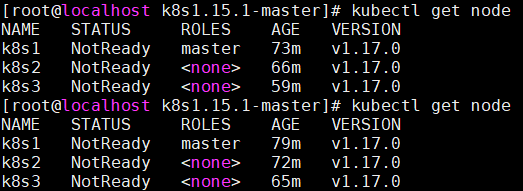

The problem:

Looking for the cause in the kubelet logs:

    [root@localhost k8s1.15.1-master]# journalctl -f -u kubelet
    -- Logs begin at 三 2020-01-29 10:32:33 CST. --
    1月 29 13:22:09 k8s1 kubelet[95442]: E0129 13:22:09.758834 95442 secret.go:195] Couldn't get secret kube-system/flannel-token-4g7bs: failed to sync secret cache: timed out waiting for the condition
    1月 29 13:22:09 k8s1 kubelet[95442]: E0129 13:22:09.758868 95442 nestedpendingoperations.go:270] Operation for ""kubernetes.io/secret/96cbc74a-58d5-4d10-b7df-52732ba63938-flannel-token-4g7bs" ("96cbc74a-58d5-4d10-b7df-52732ba63938")" failed. No retries permitted until 2020-01-29 13:22:10.258852307 +0800 CST m=+3.230320911 (durationBeforeRetry 500ms). Error: "MountVolume.SetUp failed for volume "flannel-token-4g7bs" (UniqueName: "kubernetes.io/secret/96cbc74a-58d5-4d10-b7df-52732ba63938-flannel-token-4g7bs") pod "kube-flannel-ds-amd64-j7bl2" (UID: "96cbc74a-58d5-4d10-b7df-52732ba63938") : failed to sync secret cache: timed out waiting for the condition"
    1月 29 13:22:09 k8s1 kubelet[95442]: E0129 13:22:09.758890 95442 configmap.go:200] Couldn't get configMap kube-system/kube-proxy: failed to sync configmap cache: timed out waiting for the condition
    1月 29 13:22:09 k8s1 kubelet[95442]: E0129 13:22:09.758926 95442 nestedpendingoperations.go:270] Operation for ""kubernetes.io/configmap/8167b71d-457f-48d3-85bd-ef793c18a2a6-kube-proxy" ("8167b71d-457f-48d3-85bd-ef793c18a2a6")" failed. No retries permitted until 2020-01-29 13:22:10.258909639 +0800 CST m=+3.230378243 (durationBeforeRetry 500ms). Error: "MountVolume.SetUp failed for volume "kube-proxy" (UniqueName: "kubernetes.io/configmap/8167b71d-457f-48d3-85bd-ef793c18a2a6-kube-proxy") pod "kube-proxy-tvvth" (UID: "8167b71d-457f-48d3-85bd-ef793c18a2a6") : failed to sync configmap cache: timed out waiting for the condition"
    1月 29 13:22:09 k8s1 kubelet[95442]: E0129 13:22:09.758943 95442 configmap.go:200] Couldn't get configMap kube-system/coredns: failed to sync configmap cache: timed out waiting for the condition
    1月 29 13:22:09 k8s1 kubelet[95442]: E0129 13:22:09.758980 95442 nestedpendingoperations.go:270] Operation for ""kubernetes.io/configmap/eb8dcc9b-b573-4e4a-a292-f8fcce423783-config-volume" ("eb8dcc9b-b573-4e4a-a292-f8fcce423783")" failed. No retries permitted until 2020-01-29 13:22:10.258961581 +0800 CST m=+3.230430190 (durationBeforeRetry 500ms). Error: "MountVolume.SetUp failed for volume "config-volume" (UniqueName: "kubernetes.io/configmap/eb8dcc9b-b573-4e4a-a292-f8fcce423783-config-volume") pod "coredns-5c98db65d4-qdp89" (UID: "eb8dcc9b-b573-4e4a-a292-f8fcce423783") : failed to sync configmap cache: timed out waiting for the condition"
    1月 29 13:22:11 k8s1 kubelet[95442]: E0129 13:22:11.463974 95442 csi_plugin.go:267] Failed to initialize CSINodeInfo: error updating CSINode annotation: timed out waiting for the condition; caused by: the server could not find the requested resource
    1月 29 13:22:16 k8s1 kubelet[95442]: E0129 13:22:16.463855 95442 csi_plugin.go:267] Failed to initialize CSINodeInfo: error updating CSINode annotation: timed out waiting for the condition; caused by: the server could not find the requested resource
    1月 29 13:22:21 k8s1 kubelet[95442]: E0129 13:22:21.471563 95442 csi_plugin.go:267] Failed to initialize CSINodeInfo: error updating CSINode annotation: timed out waiting for the condition; caused by: the server could not find the requested resource
    1月 29 13:22:26 k8s1 kubelet[95442]: E0129 13:22:26.665812 95442 csi_plugin.go:267] Failed to initialize CSINodeInfo: error updating CSINode annotation: timed out waiting for the condition; caused by: the server could not find the requested resource
    1月 29 13:22:32 k8s1 kubelet[95442]: E0129 13:22:32.266202 95442 csi_plugin.go:267] Failed to initialize CSINodeInfo: error updating CSINode annotation: timed out waiting for the condition; caused by: the server could not find the requested resource
    1月 29 13:22:53 k8s1 kubelet[95442]: E0129 13:22:53.483584 95442 csi_plugin.go:267] Failed to initialize CSINodeInfo: error updating CSINode annotation: timed out waiting for the condition; caused by: the server could not find the requested resource
    1月 29 13:22:53 k8s1 kubelet[95442]: F0129 13:22:53.483602 95442 csi_plugin.go:281] Failed to initialize CSINodeInfo after retrying
    1月 29 13:22:53 k8s1 systemd[1]: kubelet.service: main process exited, code=exited, status=255/n/a
    1月 29 13:22:53 k8s1 systemd[1]: Unit kubelet.service entered failed state.
    1月 29 13:22:53 k8s1 systemd[1]: kubelet.service failed.
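To check whether another node is failing the same way, the signature in the log above can be matched mechanically. This is a minimal sketch, assuming the journal has first been exported as plain text (for example with `journalctl -u kubelet --no-pager`); `diagnose` is a hypothetical helper name, and the match strings are simply copied from the log lines above, not taken from any Kubernetes API.

```python
# Match strings copied from the kubelet log shown above (assumption: the
# wording is the same on other nodes running the same kubelet build).
CSINODE_ERROR = "Failed to initialize CSINodeInfo"
MISSING_RESOURCE = "the server could not find the requested resource"
FATAL_MARKER = "Failed to initialize CSINodeInfo after retrying"


def diagnose(log_lines):
    """Summarise CSINodeInfo-related kubelet failures in journalctl output.

    `log_lines` is any iterable of text lines (a list, or an open file).
    """
    report = {"csinode_errors": 0, "missing_resource": False, "fatal": False}
    for line in log_lines:
        if FATAL_MARKER in line:
            # The F-level line logged just before kubelet exits.
            report["fatal"] = True
        elif CSINODE_ERROR in line:
            # Each E-level line is one failed "error updating CSINode annotation" attempt.
            report["csinode_errors"] += 1
            if MISSING_RESOURCE in line:
                report["missing_resource"] = True
    return report
```

For example, after `journalctl -u kubelet --no-pager > kubelet.log`, opening `kubelet.log` with `encoding="utf-8"` and passing the file object to `diagnose` gives a small summary; a node matching this post would show `fatal` and `missing_resource` both set.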

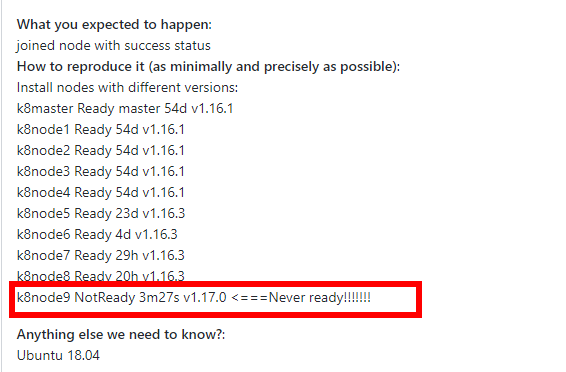

https://github.com/kubernetes/kubernetes/issues/86094

The GitHub issue above reports the same problem.

Solution:

The workaround posted in the issue: "After experiencing the same issue, editing `/var/lib/kubelet/config.yaml` to add:"

    featureGates:
      CSIMigration: false

Then restart kubelet on all three nodes, and the problem is resolved.
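Repeating the edit by hand on every node is easy to get wrong, for example by adding a second `featureGates:` key. The sketch below is a hypothetical helper (`disable_csi_migration` is not part of kubeadm or any Kubernetes tool) that applies the same change to the text of a kubelet config file. It is line-based, not a real YAML parser: it assumes `featureGates` is either absent or a top-level block mapping, and it refuses inline forms such as `featureGates: {}` rather than guessing.

```python
def disable_csi_migration(config_text: str) -> str:
    """Return kubelet config text with featureGates.CSIMigration set to false.

    Line-based sketch (assumption: block-style, top-level `featureGates:`).
    """
    lines = config_text.splitlines()

    # Locate a top-level "featureGates:" key (no leading whitespace).
    start = next((i for i, line in enumerate(lines)
                  if line.startswith("featureGates:")), None)

    if start is None:
        # No featureGates section yet: append one at the end of the file.
        lines += ["featureGates:", "  CSIMigration: false"]
        return "\n".join(lines) + "\n"

    # Text after the colon (ignoring a comment) means an inline map such as
    # "featureGates: {}", which this sketch leaves for manual editing.
    if lines[start].split(":", 1)[1].split("#", 1)[0].strip():
        raise ValueError("inline featureGates map is not handled; edit it by hand")

    # The block runs until the next non-blank line that is not indented.
    end = start + 1
    while end < len(lines) and (not lines[end].strip()
                                or lines[end][0] in " \t"):
        end += 1

    # Reuse the indentation of the existing entries (default: two spaces).
    entries = [l for l in lines[start + 1:end] if l.strip()]
    indent = entries[0][:len(entries[0]) - len(entries[0].lstrip())] if entries else "  "
    gate = indent + "CSIMigration: false"

    for i in range(start + 1, end):
        if lines[i].strip().startswith("CSIMigration:"):
            lines[i] = gate  # overwrite an existing CSIMigration value
            break
    else:
        lines.insert(start + 1, gate)  # add the gate as the first entry

    return "\n".join(lines) + "\n"
```

On each node one could read `/var/lib/kubelet/config.yaml`, keep a copy of the original, write back the result of `disable_csi_migration`, and then run `systemctl restart kubelet`. The function is idempotent, so running it twice on the same file does no harm; gates with longer names such as `CSIMigrationAWS` are left untouched because the match requires the colon right after `CSIMigration`.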