1. Add a compute node

1.1 Update hosts resolution

hostnamectl set-hostname compute2

cat>>/etc/hosts<<EOF

10.0.0.11 controller

10.0.0.31 compute1

10.0.0.32 compute2

EOF
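
Re-running the `cat>>` append above adds duplicate lines. A minimal sketch of an idempotent alternative follows; the function name `add_host_entry` and the `HOSTS_FILE` override are assumptions made for illustration, not part of the original procedure.

```shell
#!/bin/sh
# Sketch: add a hosts entry only when the name is not already mapped.
# HOSTS_FILE is an assumed override so the function can be tried on a copy first.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

add_host_entry() {
    ip="$1"
    name="$2"
    # Look for NAME as a whole field on any non-comment line.
    if awk -v n="$name" '
            $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$HOSTS_FILE" 2>/dev/null; then
        return 0    # already present, leave the file untouched
    fi
    printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
}
```

Calling `add_host_entry 10.0.0.32 compute2` twice leaves a single `compute2` line in the file.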

1.2 Configure the yum repositories

[root@compute2 ~]# cat /etc/yum.repos.d/openstack.repo

[Base_ISO]

name=base_iso

baseurl=file:///mnt/

gpgcheck=0

[Openstack_Mitaka]

name=openstack mitaka

baseurl=ftp://10.0.0.11/pub/openstackmitaka/Openstack-Mitaka/

gpgcheck=0

[root@compute2 ~]# mount /dev/sr0 /mnt/

yum makecache fast

1.3 Configure time synchronization

yum -y install chrony ntpdate

[root@compute2 ~]# vi /etc/chrony.conf

server 10.0.0.11 iburst

systemctl restart chronyd
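
The `vi` edit above can also be scripted. The sketch below comments out the existing `server` lines and appends the controller as the only active time source; the function name `set_chrony_server` is hypothetical, and it assumes the stock file uses `server` directives.

```shell
#!/bin/sh
# Sketch: make SERVER the only active "server" line in a chrony config file.
set_chrony_server() {
    conf="$1"
    server="$2"
    tmp="$(mktemp)"
    # Comment out every existing "server" line, then append the new one.
    sed 's/^server[[:space:]]/#&/' "$conf" > "$tmp"
    printf 'server %s iburst\n' "$server" >> "$tmp"
    cat "$tmp" > "$conf"    # overwrite in place so ownership and mode are kept
    rm -f "$tmp"
}
```

After `set_chrony_server /etc/chrony.conf 10.0.0.11` and a restart of chronyd, `chronyc sources` should list 10.0.0.11.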

1.4 Install the OpenStack client and openstack-selinux

yum install python-openstackclient.noarch openstack-selinux.noarch -y

1.5 Install and configure nova-compute

yum install openstack-nova-compute openstack-utils.noarch libvirt-client -y

[root@compute2 ~]# cat>/etc/nova/nova.conf<<EOF

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

my_ip = 10.0.0.32

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api_database]

[barbican]

[cache]

[cells]

[cinder]

[conductor]

[cors]

[cors.subdomain]

[database]

[ephemeral_storage_encryption]

[glance]

api_servers = http://controller:9292

[guestfs]

[hyperv]

[image_file_url]

[ironic]

[keymgr]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = nova

[libvirt]

virt_type = qemu

cpu_mode = none

[matchmaker_redis]

[metrics]

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = neutron

[osapi_v21]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[oslo_middleware]

[oslo_policy]

[rdp]

[serial_console]

[spice]

[ssl]

[trusted_computing]

[upgrade_levels]

[vmware]

[vnc]

enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = \$my_ip

novncproxy_base_url = http://10.0.0.11:6080/vnc_auto.html

[workarounds]

[xenserver]

EOF

# Start the services and enable them at boot

systemctl start openstack-nova-compute libvirtd

systemctl enable openstack-nova-compute libvirtd

1.6 Install neutron-linuxbridge-agent

yum install openstack-neutron-linuxbridge ebtables ipset -y

[root@compute2 ~]# cat>/etc/neutron/neutron.conf<<EOF

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

[agent]

[cors]

[cors.subdomain]

[database]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = neutron

[matchmaker_redis]

[nova]

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

[oslo_messaging_amqp]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[oslo_policy]

[quotas]

[ssl]

EOF

[root@compute2 ~]# cat>/etc/neutron/plugins/ml2/linuxbridge_agent.ini<<EOF

[DEFAULT]

[agent]

[linux_bridge]

physical_interface_mappings = provider:eth0

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

[vxlan]

enable_vxlan = False

EOF

1.7 Start the agent

systemctl start neutron-linuxbridge-agent

systemctl enable neutron-linuxbridge-agent

1.8 Host aggregates

Creating a host aggregate lets you direct newly created instances to the compute nodes that belong to that aggregate.
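
As a sketch of how this could be done from the command line, the function below only prints the `openstack aggregate` commands so they can be reviewed before running. The function name and the aggregate and zone names in the usage note are hypothetical.

```shell
#!/bin/sh
# Sketch: print the commands that create an aggregate (exposed as an
# availability zone) and add compute hosts to it. Nothing is executed here.
aggregate_commands() {
    aggregate="$1"
    zone="$2"
    shift 2
    printf 'openstack aggregate create --zone %s %s\n' "$zone" "$aggregate"
    for host in "$@"; do
        printf 'openstack aggregate add host %s %s\n' "$aggregate" "$host"
    done
}
```

For example, `aggregate_commands agg-compute2 az-compute2 compute2` prints two commands to run on the controller after sourcing admin-openrc. An instance can then be steered to that group by choosing the availability zone `az-compute2` at launch.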

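2. How projects, users, and roles relate

This OpenStack deployment has only two roles:

- admin

- user

A project can have multiple member users. A user with the user role can manage only the virtual resources of the projects they belong to, can create compute resources within the quota, and cannot see the resources of projects they are not authorized for. Their management menu shows only the Project tab, and they cannot migrate virtual machines.

The admin role can assign quotas to projects, which keeps user-role accounts from overusing resources. An Admin tab appears in the menu bar, and all compute resources are visible there; in the Project tab, however, this user still cannot see the resources of other projects.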
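
The relationship can be illustrated with the CLI. The sketch below prints, without executing, the commands that create a project, add a member with the user role, and cap the project's instance quota. The function name and the project and user names in the usage note are hypothetical.

```shell
#!/bin/sh
# Sketch: print the commands that set up one project member with the "user"
# role and an instance quota. Review the output, then run the lines by hand.
project_member_commands() {
    project="$1"
    user="$2"
    instances="$3"
    printf 'openstack project create --domain default %s\n' "$project"
    printf 'openstack user create --domain default --password-prompt %s\n' "$user"
    printf 'openstack role add --project %s --user %s user\n' "$project" "$user"
    printf 'openstack quota set --instances %s %s\n' "$instances" "$project"
}
```

`project_member_commands demo alice 5` prints four commands. They need admin credentials, since only the admin role can set quotas.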

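3. Migrate the glance service

3.1 Stop the original services

systemctl stop openstack-glance-api.service openstack-glance-registry.service

3.2 Install the database on the new host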

yum -y install mariadb-server mariadb python2-PyMySQL

vi /etc/my.cnf

[mysqld]

bind-address = 10.0.0.32

default-storage-engine = innodb

innodb_file_per_table

max_connections = 4096

collation-server = utf8_gene

# Initialize the database

mysql_secure_installation

## Set the database password

Set root password? [Y/n] y

New password: # <===== openstack

Re-enter new password:

Password updated successfully!

Remove anonymous users? [Y/n] y

Disallow root login remotely? [Y/n] y

Remove test database and access to it? [Y/n] y

Reload privilege tables now? [Y/n] y

3.3 Restore the glance database

# Export the original glance database (on the controller)

mysqldump -uroot -p -B glance > glance.sql

scp glance.sql root@compute2:~

# Import the database on the new host

mysql -uroot -p < glance.sql

[root@compute2 ~]# mysql glance -uroot -p -e 'show tables;'

Enter password:

+----------------------------------+

| Tables_in_glance |

+----------------------------------+

| artifact_blob_locations |

| artifact_blobs |

| artifact_dependencies |

| artifact_properties |

| artifact_tags |

| artifacts |

| image_locations |

| image_members |

| image_properties |

| image_tags |

| images |

| metadef_namespace_resource_types |

| metadef_namespaces |

| metadef_objects |

| metadef_properties |

| metadef_resource_types |

| metadef_tags |

| migrate_version |

| task_info |

| tasks |

+----------------------------------+

# Create the glance database user

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';

3.4 Install and configure glance

yum -y install openstack-glance.noarch

scp controller:/etc/glance/glance-api.conf /etc/glance/

scp controller:/etc/glance/glance-registry.conf /etc/glance/

# Only the database connection needs to change

vi /etc/glance/glance-api.conf

......

[database]

connection = mysql+pymysql://glance:GLANCE_DBPASS@compute2/glance

......

vi /etc/glance/glance-registry.conf

......

connection = mysql+pymysql://glance:GLANCE_DBPASS@compute2/glance

......
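
Instead of editing each file in `vi`, the `connection` line can be rewritten by a small script. This is a sketch only: the function name `set_db_connection` is hypothetical, and it touches just the `connection` key inside the `[database]` section.

```shell
#!/bin/sh
# Sketch: rewrite the "connection" key of the [database] section in an
# ini-style file, leaving every other line unchanged.
set_db_connection() {
    file="$1"
    url="$2"
    tmp="$(mktemp)"
    awk -v url="$url" '
        /^\[/ { in_db = ($0 == "[database]") }    # track the current section
        in_db && /^connection[ \t]*=/ { print "connection = " url; next }
        { print }
    ' "$file" > "$tmp"
    cat "$tmp" > "$file"    # overwrite in place so ownership and mode are kept
    rm -f "$tmp"
}
```

For both glance files the call would look like `set_db_connection /etc/glance/glance-api.conf 'mysql+pymysql://glance:GLANCE_DBPASS@compute2/glance'`.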

# Start the services

systemctl start openstack-glance-api.service openstack-glance-registry.service

systemctl enable openstack-glance-api.service openstack-glance-registry.service

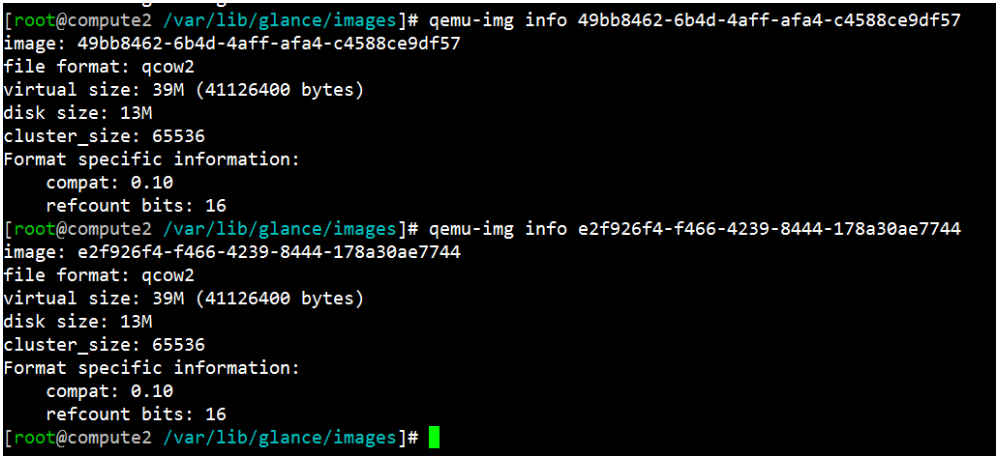

3.5 Migrate the image files

-p preserves file attributes

scp -p root@10.0.0.11:/var/lib/glance/images/* /var/lib/glance/images/

chown -R glance:glance /var/lib/glance/
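
After copying, it is worth confirming that the image files arrived intact. A minimal sketch, assuming `md5sum` is available (as on CentOS); the function names are hypothetical.

```shell
#!/bin/sh
# Sketch: compare the contents of two image directories by checksum.

# Print "checksum  ./relative-path" for every file under a directory, sorted by path.
image_checksums() {
    ( cd "$1" && find . -type f -exec md5sum {} + | sort -k 2 )
}

# Succeed only when both directories hold the same files with the same contents.
same_images() {
    [ "$(image_checksums "$1")" = "$(image_checksums "$2")" ]
}
```

`same_images` works when both directories are reachable from one host. Across hosts, run `image_checksums /var/lib/glance/images` on the controller and on compute2 and compare the two listings.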

3.6 Register the new service catalog entries

a. Method 1: change them with commands

# List the existing glance endpoints

openstack endpoint list|grep glance

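# Delete the old endpoints (repeat for each ID)

openstack endpoint delete 2879c39c7dc64db8a5f82d479b6d2f08

# The argument is an endpoint ID returned by the list command above

# Register the new glance endpoints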

[root@controller ~]# openstack endpoint create --region RegionOne image public http://compute2:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 8930f09db8f34ed9a75f0c5060dc40b2 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | f7636aa78fc946e5a9f0464b0c2a5209 |

| service_name | glance |

| service_type | image |

| url | http://compute2:9292 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne image internal http://compute2:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 8cb738ccb9a748379ec9c9ddc35b0e85 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | f7636aa78fc946e5a9f0464b0c2a5209 |

| service_name | glance |

| service_type | image |

| url | http://compute2:9292 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne image admin http://compute2:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 95223313eedc42259d43e9f73d4adf3b |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | f7636aa78fc946e5a9f0464b0c2a5209 |

| service_name | glance |

| service_type | image |

| url | http://compute2:9292 |

+--------------+----------------------------------+

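b. Method 2: edit the database directly

The endpoint table in the keystone database records these endpoint URLs. They can be replaced with a vim substitution of the form:

%s###gc

c # confirm each replacement interactively

% # apply to every line of the file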
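
A non-interactive equivalent of that substitution is `sed` applied to a dump of the table. The sketch below uses the same `#` delimiter; the function name `rewrite_endpoint_url` and the dump file names in the usage note are hypothetical.

```shell
#!/bin/sh
# Sketch: replace every occurrence of OLD with NEW, reading stdin and
# writing stdout. "#" is the sed delimiter because URLs contain "/".
# OLD is treated as a regular expression, so "." matches any character.
rewrite_endpoint_url() {
    old="$1"
    new="$2"
    sed "s#${old}#${new}#g"
}
```

Usage could look like `rewrite_endpoint_url 'http://controller:9292' 'http://compute2:9292' < endpoint.sql > endpoint.new.sql`, followed by a review of the diff before importing the result.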

3.7 Update the glance-api address in the controller and compute node configuration files

- The file involved is /etc/nova/nova.conf on each node

yum -y install openstack-utils

openstack-config --set /etc/nova/nova.conf glance api_servers http://compute2:9292

# Restart the services

## Controller node

systemctl restart openstack-nova-api

## Compute nodes

systemctl restart openstack-nova-compute

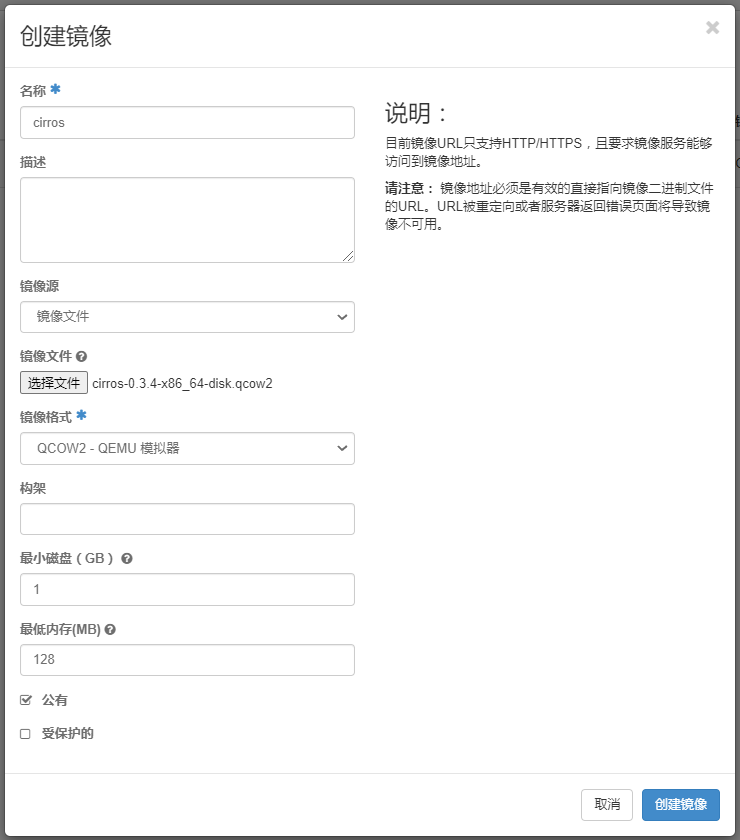

3.8 Verify

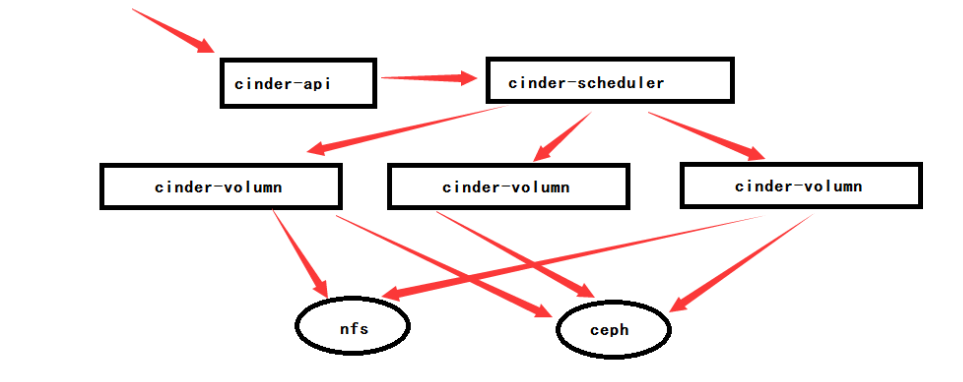

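4. cinder Block Storage service

Perform the following on the controller node.

cinder-api: receives and responds to external block storage requests

cinder-volume: provides storage space and interfaces with other storage backends such as Ceph

cinder-scheduler: the scheduler; it decides which cinder-volume provides the requested space, choosing the best storage node on which to create the volume

cinder-backup: backs up volumes

The Block Storage service (cinder) provides block storage to instances. How storage is provisioned and consumed is determined by the Block Storage driver, or by the drivers in a multi-backend configuration. Many drivers are available: NAS/SAN, NFS, iSCSI, Ceph, and others.

Typically, the Block Storage API and scheduler services run on the controller node. Depending on the driver in use, the volume service can run on the controller node, a compute node, or a dedicated storage node.

4.1 Create the database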

mysql -u root -p

CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'CINDER_DBPASS';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'CINDER_DBPASS';

4.2 Create the user and service catalog entries

[root@controller ~]# . admin-openrc

[root@controller ~]# openstack user create --domain default --password cinder cinder

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | 1510ca4a5403403b9bb65719bfdf67fe |

| enabled | True |

| id | 1df51977b51b4efc98b94cf408226b17 |

| name | cinder |

+-----------+----------------------------------+

[root@controller ~]# openstack role add --project service --user cinder admin

# Create the v1 and v2 services

[root@controller ~]# openstack service create --name cinder --description "OpenStack Block Storage" volume

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Block Storage |

| enabled | True |

| id | 2fc13b591ef5476bb352ddae87d485ee |

| name | cinder |

| type | volume |

+-------------+----------------------------------+

[root@controller ~]# openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Block Storage |

| enabled | True |

| id | 3f99b7fdf9974442989de7f8b682638b |

| name | cinderv2 |

| type | volumev2 |

+-------------+----------------------------------+

# Create the v1 API endpoints

[root@controller ~]# openstack endpoint create --region RegionOne volume public http://controller:8776/v1/%\(tenant_id\)s

+--------------+-----------------------------------------+

| Field | Value |

+--------------+-----------------------------------------+

| enabled | True |

| id | 7b171d5694894c5fbabb703b389db78f |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 2fc13b591ef5476bb352ddae87d485ee |

| service_name | cinder |

| service_type | volume |

| url | http://controller:8776/v1/%(tenant_id)s |

+--------------+-----------------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne volume internal http://controller:8776/v1/%\(tenant_id\)s

+--------------+-----------------------------------------+

| Field | Value |

+--------------+-----------------------------------------+

| enabled | True |

| id | 5a4de45c7ba54ffbb8e1e426d7ffc856 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 2fc13b591ef5476bb352ddae87d485ee |

| service_name | cinder |

| service_type | volume |

| url | http://controller:8776/v1/%(tenant_id)s |

+--------------+-----------------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne volume admin http://controller:8776/v1/%\(tenant_id\)s

+--------------+-----------------------------------------+

| Field | Value |

+--------------+-----------------------------------------+

| enabled | True |

| id | 638458bc46754431ba7f3f4eabed6a80 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 2fc13b591ef5476bb352ddae87d485ee |

| service_name | cinder |

| service_type | volume |

| url | http://controller:8776/v1/%(tenant_id)s |

+--------------+-----------------------------------------+

# Create the v2 API endpoints

[root@controller ~]# openstack endpoint create --region RegionOne volumev2 public http://controller:8776/v2/%\(tenant_id\)s

+--------------+-----------------------------------------+

| Field | Value |

+--------------+-----------------------------------------+

| enabled | True |

| id | 57cbea08dd164f18bd70c08d1fc3708a |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 3f99b7fdf9974442989de7f8b682638b |

| service_name | cinderv2 |

| service_type | volumev2 |

| url | http://controller:8776/v2/%(tenant_id)s |

+--------------+-----------------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne volumev2 internal http://controller:8776/v2/%\(tenant_id\)s

+--------------+-----------------------------------------+

| Field | Value |

+--------------+-----------------------------------------+

| enabled | True |

| id | dae5b746526345c093be962f8dfe46b3 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 3f99b7fdf9974442989de7f8b682638b |

| service_name | cinderv2 |

| service_type | volumev2 |

| url | http://controller:8776/v2/%(tenant_id)s |

+--------------+-----------------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne volumev2 admin http://controller:8776/v2/%\(tenant_id\)s

+--------------+-----------------------------------------+

| Field | Value |

+--------------+-----------------------------------------+

| enabled | True |

| id | 1eff773038fc4541b9a47596ce7998e3 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 3f99b7fdf9974442989de7f8b682638b |

| service_name | cinderv2 |

| service_type | volumev2 |

| url | http://controller:8776/v2/%(tenant_id)s |

+--------------+-----------------------------------------+
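
The six `endpoint create` calls above differ only in API version and interface, so they can be generated in a loop. A sketch that prints the commands for review; the function name is hypothetical. The URL is single-quoted in the output so that the parentheses in `%(tenant_id)s` need no backslash escapes.

```shell
#!/bin/sh
# Sketch: print the six cinder endpoint-create commands (v1 and v2, each with
# public, internal and admin interfaces). Nothing is executed here.
cinder_endpoint_commands() {
    for version in 1 2; do
        # Service type is "volume" for v1 and "volumev2" for v2, as above.
        if [ "$version" = 1 ]; then service=volume; else service=volumev2; fi
        for interface in public internal admin; do
            printf "openstack endpoint create --region RegionOne %s %s 'http://controller:8776/v%s/%%(tenant_id)s'\n" \
                "$service" "$interface" "$version"
        done
    done
}
```

Running `cinder_endpoint_commands` lists the commands; once they look right, `cinder_endpoint_commands | sh` would execute them with the credentials loaded from admin-openrc.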

4.3 Install and configure

yum install openstack-cinder -y

cp /etc/cinder/cinder.conf{,_bak}

grep -Ev '^#|^$' /etc/cinder/cinder.conf_bak >/etc/cinder/cinder.conf

[root@controller ~]# cat /etc/cinder/cinder.conf

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

my_ip = 10.0.0.11

[BACKEND]

[BRCD_FABRIC_EXAMPLE]

[CISCO_FABRIC_EXAMPLE]

[COORDINATION]

[FC-ZONE-MANAGER]

[KEYMGR]

[cors]

[cors.subdomain]

[cinder]

os_region_name = RegionOne

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = cinder

[matchmaker_redis]

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[oslo_messaging_amqp]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[ssl]

su -s /bin/sh -c "cinder-manage db sync" cinder

[root@controller ~]# mysql cinder -uroot -p -e 'show tables;'

4.4 Configure nova-api on the controller node

vi /etc/nova/nova.conf

[cinder]

os_region_name = RegionOne

# Restart nova-api, then enable and start the cinder services

systemctl restart openstack-nova-api.service

systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

4.5 Configure a storage node

Any host can be used.

Make sure the node's hosts file and private yum repository are set up.

a. Install LVM

yum install lvm2 -y

systemctl enable lvm2-lvmetad.service

systemctl start lvm2-lvmetad.service

b. Configure LVM

# Rescan the SCSI hosts so that newly added disks are detected without rebooting the virtual machine

echo '- - -' >/sys/class/scsi_host/host0/scan

echo '- - -' >/sys/class/scsi_host/host1/scan

echo '- - -' >/sys/class/scsi_host/host2/scan

[root@compute2 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

fd0 2:0 1 4K 0 disk

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 500M 0 part /boot

├─sda2 8:2 0 1G 0 part [SWAP]

└─sda3 8:3 0 18.5G 0 part /

sdb 8:16 0 20G 0 disk

sdc 8:32 0 20G 0 disk

pvcreate /dev/sdb

pvcreate /dev/sdc

vgcreate cinder-ssd /dev/sdb

vgcreate cinder-hdd /dev/sdc

[root@compute2 ~]# vgdisplay |grep 'VG Name'

VG Name cinder-hdd

VG Name cinder-ssd

vi /etc/lvm/lvm.conf

devices {

...

# Only sdb and sdc are accepted for LVM operations; every other device is rejected.

filter = [ "a/sdb/", "a/sdc/", "r/.*/" ]

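Only instances can access Block Storage volumes. However, the underlying operating system manages the devices associated with those volumes. By default, the LVM volume scanning tool scans the /dev directory for block storage devices that contain volumes. If projects use LVM on their own volumes, the scanning tool detects those volumes and tries to cache them, which can cause a variety of problems for both the underlying operating system and the project volumes. You must therefore reconfigure LVM, in /etc/lvm/lvm.conf, to scan only the devices that contain the cinder volume groups.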
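
The filter is an ordered list of accept (`a`) and reject (`r`) patterns ending in a reject-everything rule. A small sketch that builds the line from a device list; the function name `lvm_filter_line` is hypothetical.

```shell
#!/bin/sh
# Sketch: build an lvm.conf filter line that accepts the listed devices and
# rejects everything else.
lvm_filter_line() {
    line='filter = ['
    for dev in "$@"; do
        line="$line \"a/$dev/\","
    done
    # Reject every device that was not explicitly accepted.
    printf '%s "r/.*/" ]\n' "$line"
}
```

`lvm_filter_line sdb sdc` reproduces the filter shown above. If the operating system disk itself uses LVM, that device has to be listed as well, for example `lvm_filter_line sda sdb sdc`.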

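4.6 Deploy the cinder components

The storage components deployed on the storage node here communicate with the controller node.

Configuration file, version 1: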

yum install openstack-cinder targetcli python-keystone -y

targetcli # the component that provides the iSCSI service

[root@compute2 ~]# vim /etc/cinder/cinder.conf

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

my_ip = 10.0.0.32

# Two storage backends, [ssd] and [hdd], are enabled here; they match the sections at the end of the file

enabled_backends = ssd,hdd

glance_api_servers = http://controller:9292

[BACKEND]

[BRCD_FABRIC_EXAMPLE]

[CISCO_FABRIC_EXAMPLE]

[COORDINATION]

[FC-ZONE-MANAGER]

[KEYMGR]

[cors]

[cors.subdomain]

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = cinder

[matchmaker_redis]

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[oslo_messaging_amqp]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[ssl]

[ssd]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

# The VG configured here is the one created earlier.

volume_group = cinder-ssd

iscsi_protocol = iscsi

iscsi_helper = lioadm

[hdd]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-hdd

iscsi_protocol = iscsi

iscsi_helper = lioadm

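Configuration file, version 2:

Version 1, which follows the official guide, worked without any problem on the first run. After the virtual machine was suspended once and then resumed, however, the following errors started to appear.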

2021-11-10 09:18:57.200 11973 WARNING cinder.scheduler.filter_scheduler [req-5cc304f8-952b-4706-bd6e-98181e88543a 6de5754abfd74a95b54b8ed0a8c8de33 66cb1c041d104fe386912fdaed97f71d - - -] No weighed hosts found for volume with properties: {u'name': u'lvm', u'qos_specs_id': None, u'deleted': False, u'created_at': u'2021-11-09T05:00:10.000000', u'updated_at': None, u'extra_specs': {u'enabled_backends': u'lvm'}, u'is_public': True, u'deleted_at': None, u'id': u'fa07c691-887f-40a8-a5c1-d0262aea5429', u'description': u''}

2021-11-10 09:18:57.203 11973 ERROR cinder.scheduler.flows.create_volume [req-5cc304f8-952b-4706-bd6e-98181e88543a 6de5754abfd74a95b54b8ed0a8c8de33 66cb1c041d104fe386912fdaed97f71d - - -] Failed to run task cinder.scheduler.flows.create_volume.ScheduleCreateVolumeTask;volume:create: No valid host was found. No weighed hosts available

I searched online for a long time without finding an effective solution. The suggestions found online mainly fall into two categories:

- Time synchronization

- The cinder-volume service on the node is not running, which can be checked with:

cinder service-list

+------------------+-------------+------+---------+-------+

| Binary | Host | Zone | Status | State |

+------------------+-------------+------+---------+-------+

| cinder-backup | storage | nova | enabled | up |

| cinder-scheduler | controller | nova | enabled | up |

| cinder-volume | controller | nova | enabled | down |

| cinder-volume | storage@hdd | nova | enabled | up |

| cinder-volume | storage@lvm | nova | enabled | up |

+------------------+-------------+------+---------+-------+

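The cinder-volume entry for controller in the middle shows as down. I removed it by editing the database, but creating a volume after deleting that record still produced the same error as above.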
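
Spotting down services in a longer listing is easier with a filter. The sketch below reads the table printed by `cinder service-list` on stdin and prints the binary and host of every row whose State column is `down`. The function name is hypothetical, and it assumes State is the fifth column, as in the listing above.

```shell
#!/bin/sh
# Sketch: list "binary host" for every service whose State column is "down".
# Reads the ASCII table produced by `cinder service-list` from stdin.
down_services() {
    awk -F'|' '
        NF >= 7 {
            binary = $2; host = $3; state = $6
            gsub(/[ \t]/, "", binary)
            gsub(/[ \t]/, "", host)
            gsub(/[ \t]/, "", state)
            if (state == "down") print binary, host
        }
    '
}
```

Usage: `cinder service-list | down_services`. For the table above it prints `cinder-volume controller`.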

[root@storage cinder]# cat /etc/cinder/cinder.conf

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

my_ip = 10.4.7.240

enabled_backends = lvm,hdd

glance_api_servers = http://controller:9292

[BACKEND]

[BRCD_FABRIC_EXAMPLE]

[CISCO_FABRIC_EXAMPLE]

[COORDINATION]

[FC-ZONE-MANAGER]

[KEYMGR]

[cors]

[cors.subdomain]

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = cinder

[matchmaker_redis]

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[oslo_messaging_amqp]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[ssl]

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = lvmg

iscsi_protocol = iscsi

iscsi_helper = lioadm

# Newly added backend identifier

volume_backend_name = lvm

[hdd]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = hdd-group

iscsi_protocol = iscsi

iscsi_helper = lioadm

# Newly added backend identifier

volume_backend_name = hdd

# Key-value pairs to set on the volume types in the dashboard: volume_backend_name <====> lvm

# volume_backend_name <====> hdd

systemctl restart openstack-cinder-volume.service

4.7 Start the services

systemctl enable openstack-cinder-volume.service target.service

systemctl start openstack-cinder-volume.service target.service

4.8 验证

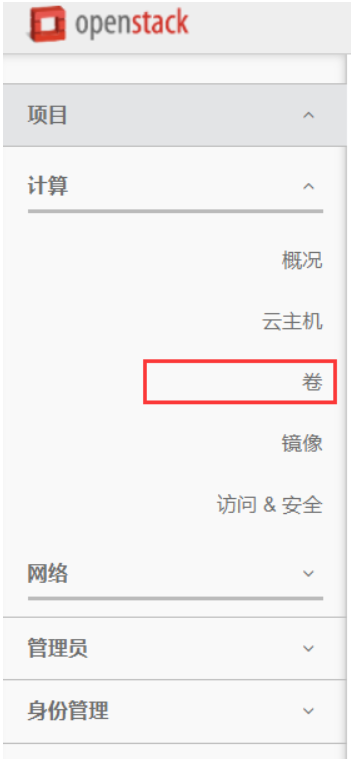

多出了卷的选项。

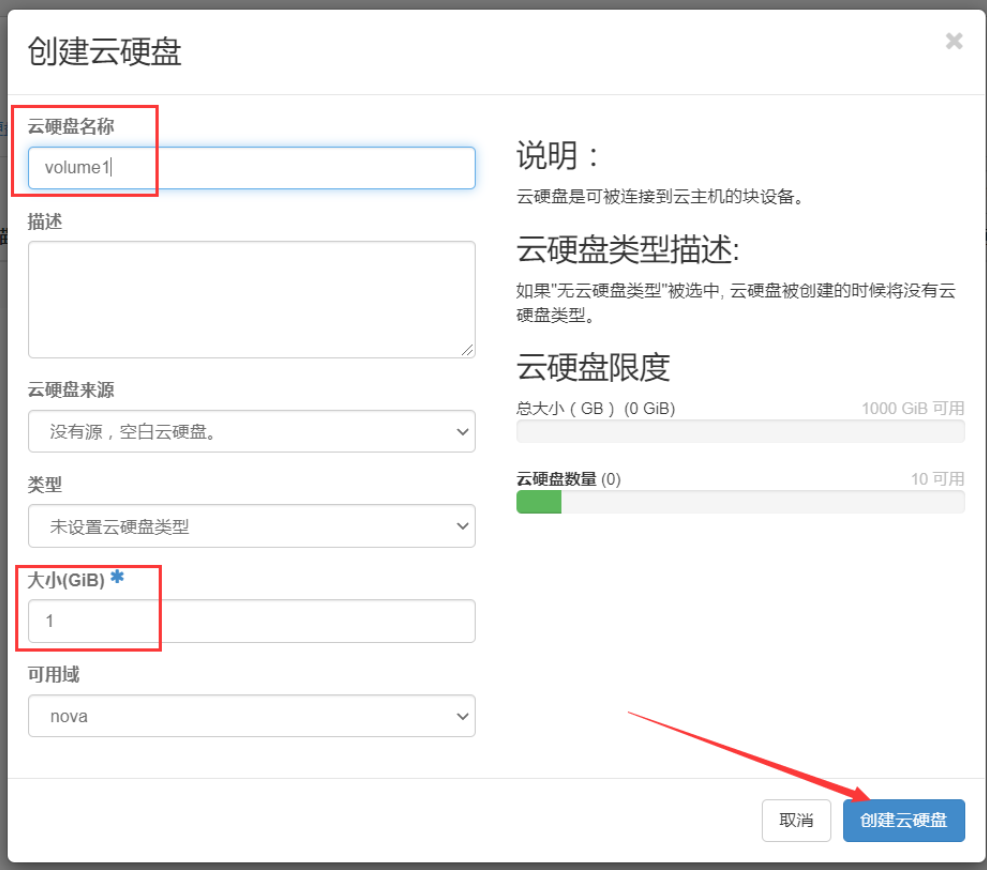

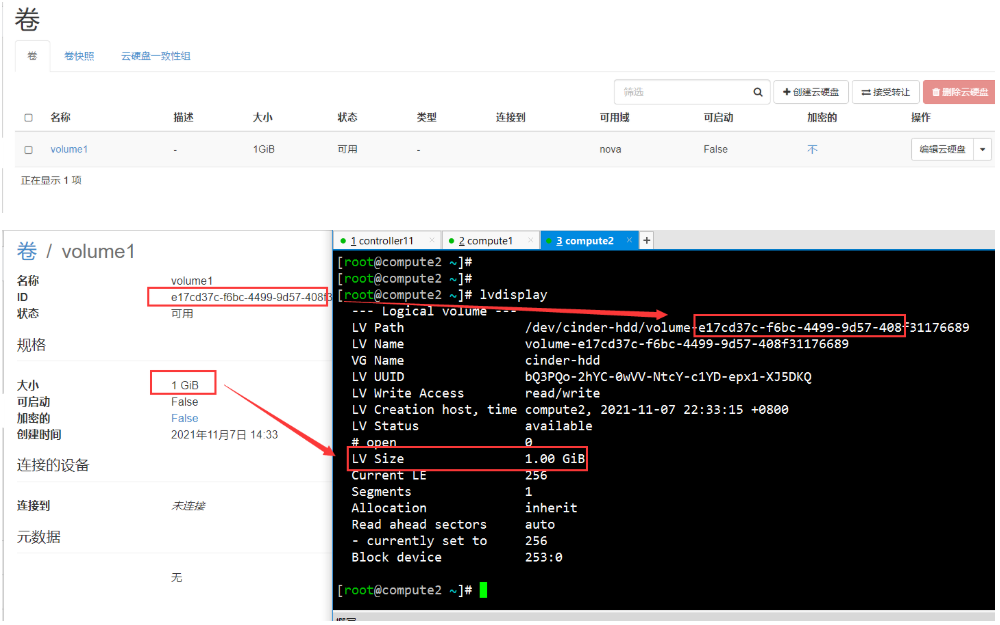

a. Create a volume

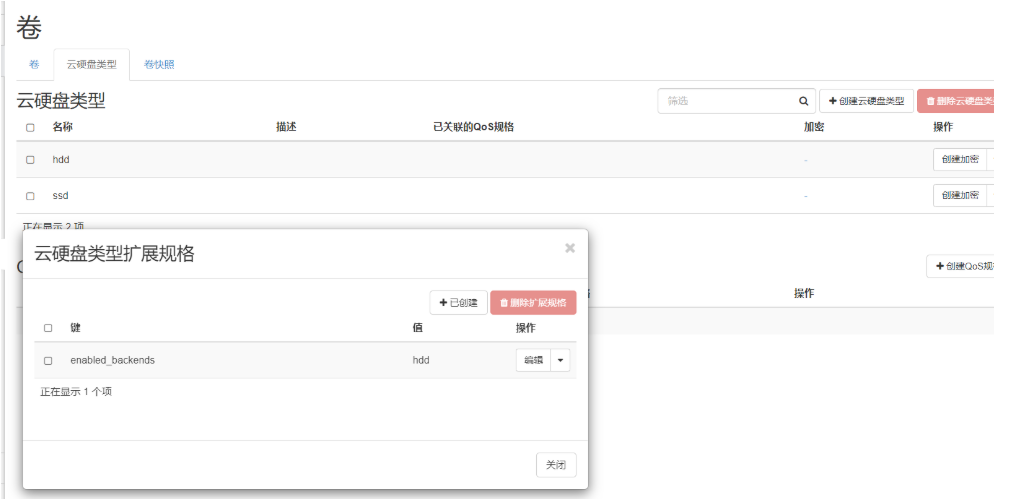

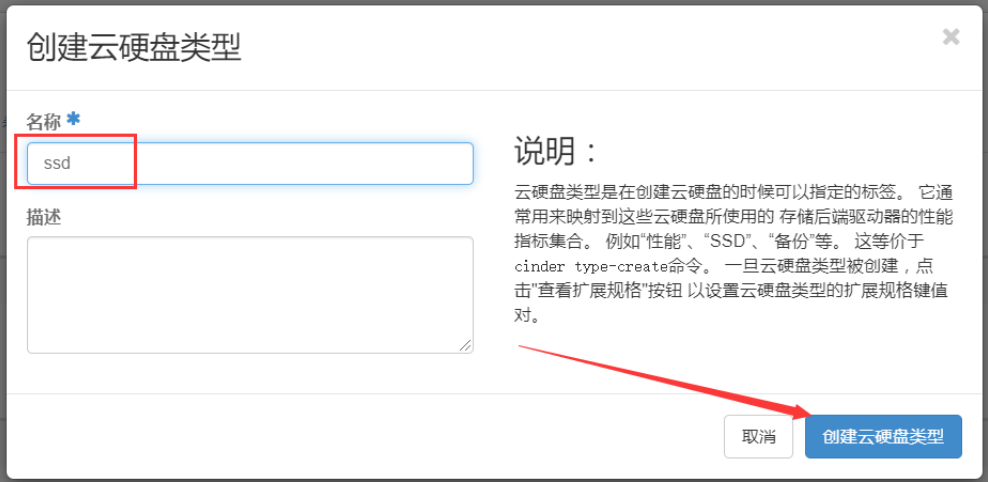

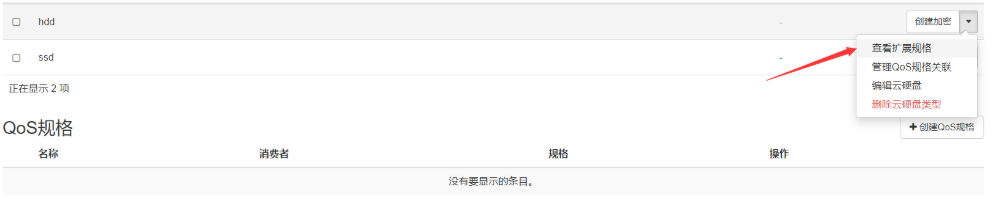

4.9 Create volume types

Creating volume types lets you choose which storage backend a volume uses when you create it.

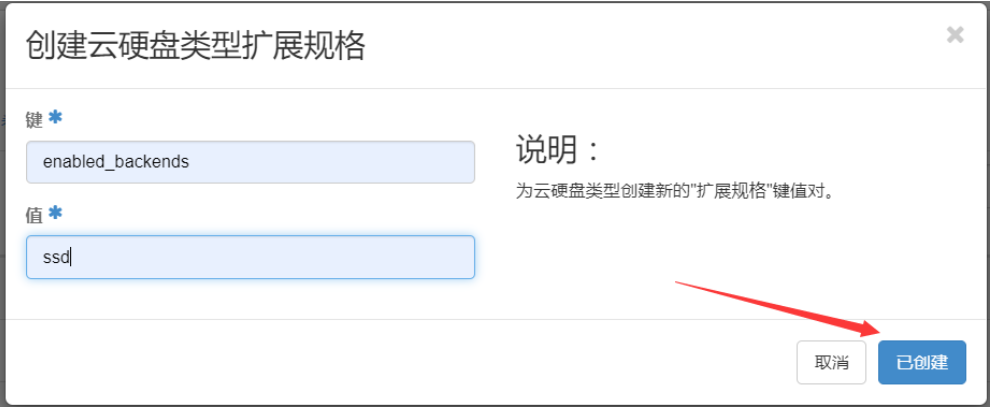

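Bind the backend property

The key-value pair set on the volume type must match the volume_backend_name defined in a backend section of cinder.conf, that is, one of the sections listed in enabled_backends. This controls which VG a new volume is created in.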
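
The same binding can be done from the command line instead of the dashboard. A sketch that prints the commands for review, assuming each volume type is named after its `volume_backend_name`; the function name is hypothetical.

```shell
#!/bin/sh
# Sketch: for each backend name, print the commands that create a volume type
# and bind it to that backend through the volume_backend_name property.
volume_type_commands() {
    for backend in "$@"; do
        printf 'openstack volume type create %s\n' "$backend"
        printf 'openstack volume type set --property volume_backend_name=%s %s\n' "$backend" "$backend"
    done
}
```

For the version 2 configuration, `volume_type_commands lvm hdd` prints four commands. After running them, a volume created with type `hdd` should be scheduled onto the backend whose section sets `volume_backend_name = hdd`.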