一、数据集说明

使用的数据集是FER2013

kaggle FER2013https://www.kaggle.com/c/challenges-in-representation-learning-facial-expression-recognition-challenge/data

该数据集官方的下载链接目前失效了,可通过这个链接下载:https://www.kaggle.com/shawon10/facial-expression-detection-cnn/data?select=fer2013.csv

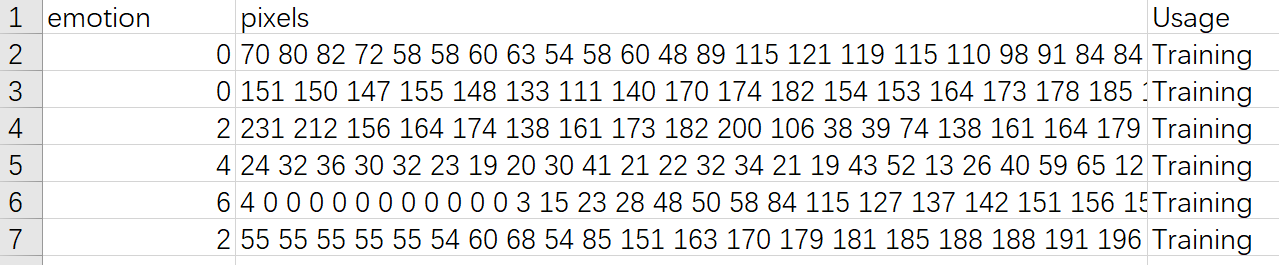

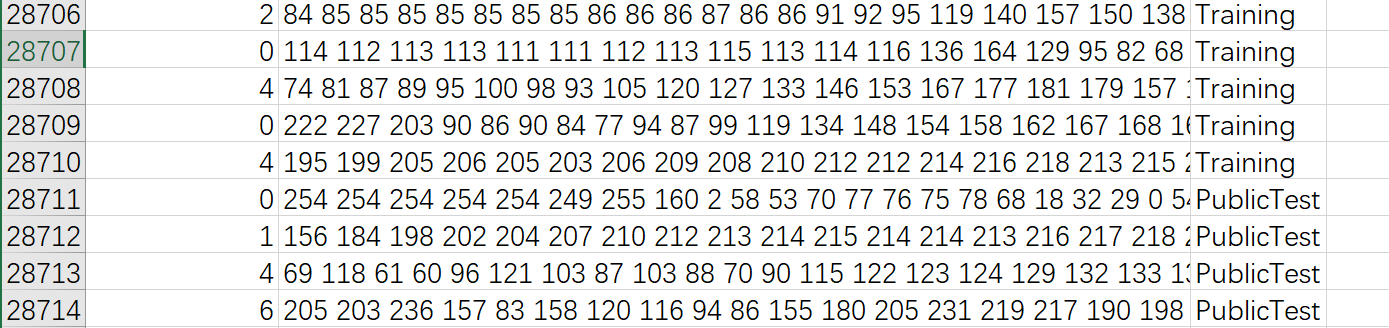

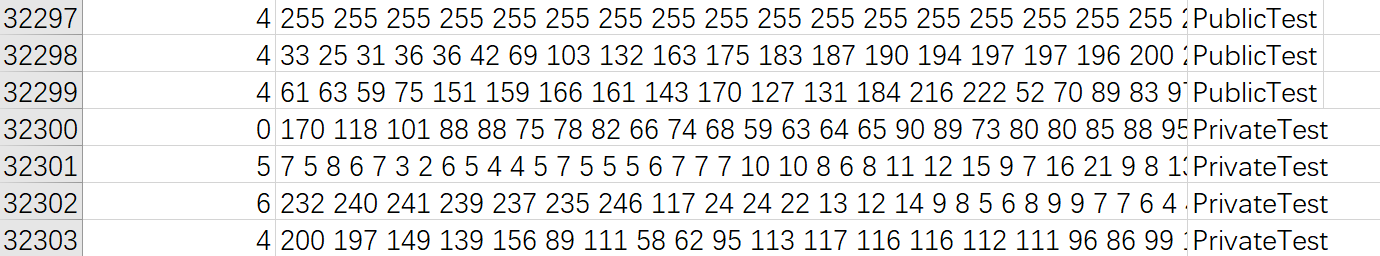

FER2013数据集下载下来是一个总 3w5k 行左右的csv文件:

......

......

第一列emotion是图像标签,即 y:[0, 6]。

分别代表7种emotion:0 - ‘angry’(4953),1 - ‘disgusted’(547),2 - ‘feaful’(5121),3 - ‘happy’(8989),4 - ‘sad’(6077),5 - ‘surprised’(4002),6 - ‘neutral’(6198)(数据集不是那么的平衡)

第二列是人脸图像的灰度像素值:[0, 255],大小是48*48

第三列是图像用途分类。根据第三列将图像分为 训练集(training),验证集(public test),测试集(private test)

二、预处理数据

读数据:

1 importpandas as pd 2 importnumpy as np 3 4 data_path = "../DATA/fer2013.csv" 5 df = pd.read_csv(data_path)

其中pixel列是一个字符串,将pixel列转为 int型 的list,表示像素灰度值

1 df['pixels'] = df['pixels'].str.split(' ').apply(lambda x: [int(i) for i in x])

在此步直接将pixel reshape成48*48的:

df['pixels'] = df['pixels'].str.split(' ').apply(lambda x: np.array([int(i) for i in x]).reshape(48,48))

注:后训练模型时发现不支持int64型数据,所以应该转为np.unit8,如下:

df['pixels'] = df['pixels'].str.split(' ').apply(lambda x: np.array([np.uint8(i) for i in x]).reshape(48,48))

将FER2013.csv根据用途(usage)分为train、val、test

分完后记得把原来的dataframe的index重设,否则val、test的dataframe序号不连续,后面y_val转成torch.LongTensor时会报错

1 defdevide_x_y(my_df): 2 return my_df['pixels'].reset_index(drop=True), my_df['emotion'].reset_index(drop=True) 3 4 #分别筛选出training、publictest、privatetest对应的行 5 training = df['Usage']=="Training" 6 publicTest = df['Usage'] == "PublicTest" 7 privateTest = df["Usage"] == "PrivateTest" 8 9 #读取对应行的数据 10 train =df[training] 11 public_t =df[publicTest] 12 private_t =df[privateTest] 13 14 #分为 X 和 y 15 train_x, train_y =devide_x_y(train) 16 val_x, val_y =devide_x_y(public_t) 17 test_x, test_y = devide_x_y(private_t)

分完后,各数据集的行数如下:

train:28709

val:3589

test:3589

len(train_x[0])每个图片长度为 2304 --> 48 * 48

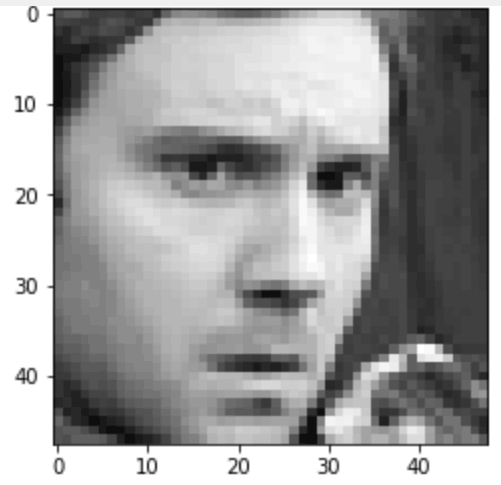

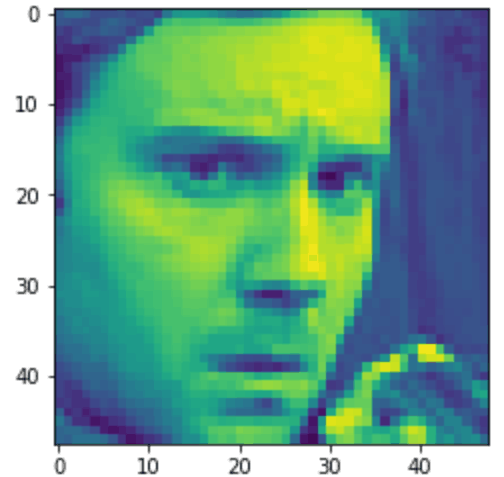

画一张出来看一下

1 importmatplotlib.pyplot as plt 2 3 img_test = train_x[0].reshape(48,48) 4 plt.figure() 5 plt.imshow(img_test,cmap='gray') 6 plt.show()

显示如下:

注:如果画图时没有注明是灰度图:cmap=gray,则会显示如下:

可以看到,这个48*48的图的画质不太清晰,马赛克略有点严重

三、制作数据集

1 importtorchvision.transforms as transforms 2 from torch.utils.data importDataset, DataLoader 3 importtorch 4 5 train_transform =transforms.Compose([ 6 transforms.ToPILImage(), 7 transforms.RandomHorizontalFlip(), 8 transforms.RandomRotation(5), 9 transforms.ToTensor(), 10 ]) 11 12 test_transform =transforms.Compose([ 13 transforms.ToPILImage(), 14 transforms.ToTensor(), 15 ]) 16 17 classMicroExpreDataset(Dataset): 18 def __init__(self, x, y=None, transform=None): 19 self.x =x 20 self.y =y 21 if y is notNone: 22 #label needs to be a LongTensor 23 self.y =torch.LongTensor(y) 24 self.transform =transform 25 26 def __len__(self): 27 returnlen(self.x) 28 29 def __getitem__(self, index): 30 X =self.x[index] 31 if self.transform is notNone: 32 X =self.transform(X) 33 if self.y is notNone: 34 Y =self.y[index] 35 returnX, Y 36 else: returnX 37 38 39 batch_size = 128 40 train_set =MicroExpreDataset(train_x, train_y, train_transform) 41 val_set =MicroExpreDataset(val_x, val_y, test_transform) 42 43 train_loader = DataLoader(train_set, batch_size=batch_size, shuffle=True) 44 val_loader = DataLoader(val_set, batch_size=batch_size, shuffle=False)

四、设置模型

1 importtorch.nn as nn 2 3 classClassifier(nn.Module): 4 def __init__(self): 5 #super继承父类 6 super(Classifier, self).__init__() 7 8 #conv2d( in_channels, out_channels, kernel_size, stide, padding) 9 #input shape: [1, 48, 48] 10 self.cnn =nn.Sequential( 11 nn.Conv2d(1, 64, 2, 1, 1), #[64, 49, 49] 12 nn.BatchNorm2d(64), 13 nn.ReLU(), 14 nn.MaxPool2d(2, 2, 0), #[64, 25, 25] 15 16 nn.Conv2d(64, 128, 2, 1, 1), #[128, 26, 26] 17 nn.BatchNorm2d(128), 18 nn.ReLU(), 19 nn.MaxPool2d(2,2,0), #[128, 13, 13] 20 21 nn.Conv2d(128, 256, 3, 1, 1), #[256, 13, 13] 22 nn.BatchNorm2d(256), 23 nn.ReLU(), 24 nn.MaxPool2d(2, 2, 0), #[256, 7, 7] 25 26 nn.Conv2d(256, 256, 3, 1, 1), #[256, 7, 7] 27 nn.BatchNorm2d(256), 28 nn.ReLU(), 29 nn.MaxPool2d(2, 2, 0), #[256, 4, 4] 30 ) 31 32 self.fc =nn.Sequential( 33 nn.Linear(2304, 512), 34 nn.ReLU(), 35 nn.Linear(512, 256), 36 nn.Linear(256, 7) 37 ) 38 39 defforward(self, x): 40 out =self.cnn(x) 41 out = out.view(out.size()[0], -1) 42 return self.fc(out)

这个模型注释里面,我算maxpool后的size算错了。我以为尽管maxpool的padding是0,但是当边界不足时,也会自动补0。但事实是好像并没有。。

注:

通过下面这个代码可以打印模型各层输入和输出

1 defshow_summary(my_model, my_input_size): 2 from collections importOrderedDict 3 importpandas as pd 4 importnumpy as np 5 6 importtorch 7 from torch.autograd importVariable 8 importtorch.nn.functional as F 9 from torch importnn 10 11 12 defget_names_dict(model): 13 """ 14 Recursive walk to get names including path 15 """ 16 names ={} 17 def _get_names(module, parent_name=''): 18 for key, module inmodule.named_children(): 19 name = parent_name + '.' + key if parent_name elsekey 20 names[name]=module 21 ifisinstance(module, torch.nn.Module): 22 _get_names(module, parent_name=name) 23 _get_names(model) 24 returnnames 25 26 27 def torch_summarize_df(input_size, model, weights=False, input_shape=True, nb_trainable=False): 28 """ 29 Summarizes torch model by showing trainable parameters and weights. 30 31 author: wassname 32 url: https://gist.github.com/wassname/0fb8f95e4272e6bdd27bd7df386716b7 33 license: MIT 34 35 Modified from: 36 - https://github.com/pytorch/pytorch/issues/2001#issuecomment-313735757 37 - https://gist.github.com/wassname/0fb8f95e4272e6bdd27bd7df386716b7/ 38 39 Usage: 40 import torchvision.models as models 41 model = models.alexnet() 42 df = torch_summarize_df(input_size=(3, 224,224), model=model) 43 print(df) 44 45 # name class_name input_shape output_shape nb_params 46 # 1 features=>0 Conv2d (-1, 3, 224, 224) (-1, 64, 55, 55) 23296#(3*11*11+1)*64 47 # 2 features=>1 ReLU (-1, 64, 55, 55) (-1, 64, 55, 55) 0 48 # ... 49 """ 50 51 defregister_hook(module): 52 defhook(module, input, output): 53 name = '' 54 for key, item innames.items(): 55 if item ==module: 56 name =key 57 #<class 'torch.nn.modules.conv.Conv2d'> 58 class_name = str(module.__class__).split('.')[-1].split("'")[0] 59 module_idx =len(summary) 60 61 m_key = module_idx + 1 62 63 summary[m_key] =OrderedDict() 64 summary[m_key]['name'] =name 65 summary[m_key]['class_name'] =class_name 66 ifinput_shape: 67 summary[m_key][ 68 'input_shape'] = (-1, ) + tuple(input[0].size())[1:] 69 summary[m_key]['output_shape'] = (-1, ) + tuple(output.size())[1:] 70 ifweights: 71 summary[m_key]['weights'] =list( 72 [tuple(p.size()) for p inmodule.parameters()]) 73 74 #summary[m_key]['trainable'] = any([p.requires_grad for p in module.parameters()]) 75 ifnb_trainable: 76 params_trainable = sum([torch.LongTensor(list(p.size())).prod() for p in module.parameters() ifp.requires_grad]) 77 summary[m_key]['nb_trainable'] =params_trainable 78 params = sum([torch.LongTensor(list(p.size())).prod() for p inmodule.parameters()]) 79 summary[m_key]['nb_params'] =params 80 81 82 if not isinstance(module, nn.Sequential) and83 not isinstance(module, nn.ModuleList) and84 not (module ==model): 85 hooks.append(module.register_forward_hook(hook)) 86 87 #Names are stored in parent and path+name is unique not the name 88 names =get_names_dict(model) 89 90 #check if there are multiple inputs to the network 91 ifisinstance(input_size[0], (list, tuple)): 92 x = [Variable(torch.rand(1, *in_size)) for in_size ininput_size] 93 else: 94 x = Variable(torch.rand(1, *input_size)) 95 96 ifnext(model.parameters()).is_cuda: 97 x =x.cuda() 98 99 #create properties 100 summary =OrderedDict() 101 hooks =[] 102 103 #register hook 104 model.apply(register_hook) 105 106 #make a forward pass 107 model(x) 108 109 #remove these hooks 110 for h inhooks: 111 h.remove() 112 113 #make dataframe 114 df_summary = pd.DataFrame.from_dict(summary, orient='index') 115 116 returndf_summary 117 118 119 #Test on alexnet 120 importtorchvision.models as models 121 df = torch_summarize_df(input_size=my_input_size, model=my_model) 122 print(df)

实际的模型输入输出应该是这样

name class_name input_shape output_shape nb_params 1 cnn.0 Conv2d (-1, 1, 48, 48) (-1, 64, 49, 49) tensor(320) 2 cnn.1 BatchNorm2d (-1, 64, 49, 49) (-1, 64, 49, 49) tensor(128) 3 cnn.2 ReLU (-1, 64, 49, 49) (-1, 64, 49, 49) 0 4 cnn.3 MaxPool2d (-1, 64, 49, 49) (-1, 64, 24, 24) 0 5 cnn.4 Conv2d (-1, 64, 24, 24) (-1, 128, 25, 25) tensor(32896) 6 cnn.5 BatchNorm2d (-1, 128, 25, 25) (-1, 128, 25, 25) tensor(256) 7 cnn.6 ReLU (-1, 128, 25, 25) (-1, 128, 25, 25) 0 8 cnn.7 MaxPool2d (-1, 128, 25, 25) (-1, 128, 12, 12) 0 9 cnn.8 Conv2d (-1, 128, 12, 12) (-1, 256, 12, 12) tensor(295168) 10 cnn.9 BatchNorm2d (-1, 256, 12, 12) (-1, 256, 12, 12) tensor(512) 11 cnn.10 ReLU (-1, 256, 12, 12) (-1, 256, 12, 12) 0 12 cnn.11 MaxPool2d (-1, 256, 12, 12) (-1, 256, 6, 6) 0 13 cnn.12 Conv2d (-1, 256, 6, 6) (-1, 256, 6, 6) tensor(590080) 14 cnn.13 BatchNorm2d (-1, 256, 6, 6) (-1, 256, 6, 6) tensor(512) 15 cnn.14 ReLU (-1, 256, 6, 6) (-1, 256, 6, 6) 0 16 cnn.15 MaxPool2d (-1, 256, 6, 6) (-1, 256, 3, 3) 0 17 fc.0 Linear (-1, 2304) (-1, 512) tensor(1180160) 18 fc.1 Dropout (-1, 512) (-1, 512) 0 19 fc.2 ReLU (-1, 512) (-1, 512) 0 20 fc.3 Linear (-1, 512) (-1, 256) tensor(131328) 21 fc.4 Dropout (-1, 256) (-1, 256) 0 22 fc.5 Linear (-1, 256) (-1, 7) tensor(1799)

五、训练

因为工作站有两张卡,仅一张可用,需打印看一下可用的那张的编号

torch.cuda.get_device_name(0)

训练30个epoch

1 importos 2 importtime 3 4 os.environ["CUDA_VISIBLE_DEVICES"] = '0' 5 model =Classifier().cuda() 6 7 #classification task, so use crossEntropyLoss 8 loss =nn.CrossEntropyLoss() 9 #optimizer use Adam 10 optimizer = torch.optim.Adam(model.parameters(), lr=0.001) 11 num_epoch = 30 12 13 for epoch inrange(num_epoch): 14 epoch_start_time =time.time() 15 train_acc = 0.0 16 train_loss = 0.0 17 val_acc = 0.0 18 val_loss = 0.0 19 20 #open train mode 21 model.train() 22 for i, data inenumerate(train_loader): 23 #set gradient to zero, otherwise it will be added each time 24 optimizer.zero_grad() 25 26 #train model to get the predict possibility. Actually, it is to call the forward function 27 train_pred = model(data[0].cuda()) #data[0] is x 28 batch_loss = loss(train_pred, data[1].cuda()) #data[1] is y 29 30 #use backward to calculate the gradient for each parameter 31 batch_loss.backward() 32 #update the parameters by optimizer 33 optimizer.step() 34 35 train_acc += np.sum(np.argmax(train_pred.cpu().data.numpy(), axis=1) == data[1].numpy()) 36 train_loss +=batch_loss.item() 37 38 #turn model to eval mode 39 model.eval() 40 with torch.no_grad(): 41 for i, data inenumerate(val_loader): 42 val_pred =model(data[0].cuda()) 43 batch_loss = loss(val_pred, data[1].cuda()) 44 45 val_acc += np.sum(np.argmax(val_pred.cpu().data.numpy(), axis=1) == data[1].numpy()) 46 val_loss +=batch_loss.item() 47 48 #print out the results 49 print('[%03d/%03d] %2.2f sec(s) Train Acc: %3.6f Loss: %3.6f | Val Acc: %3.6f loss: %3.6f' %50 (epoch + 1, num_epoch, time.time()-epoch_start_time, 51 train_acc/train_set.__len__(), train_loss/train_set.__len__(), val_acc/val_set.__len__(), val_loss/val_set.__len__())) 52

训练结果val acc大概 0.6左右

[001/030] 10.69 sec(s) Train Acc: 0.309450 Loss: 0.013407 | Val Acc: 0.404848 loss: 0.012436[002/030] 11.58 sec(s) Train Acc: 0.467449 Loss: 0.010781 | Val Acc: 0.494845 loss: 0.010486[003/030] 10.64 sec(s) Train Acc: 0.522728 Loss: 0.009736 | Val Acc: 0.533018 loss: 0.009739[004/030] 10.57 sec(s) Train Acc: 0.552440 Loss: 0.009222 | Val Acc: 0.550850 loss: 0.009390[005/030] 10.58 sec(s) Train Acc: 0.579087 Loss: 0.008683 | Val Acc: 0.564224 loss: 0.009504[006/030] 10.59 sec(s) Train Acc: 0.598558 Loss: 0.008282 | Val Acc: 0.566732 loss: 0.009202[007/030] 10.62 sec(s) Train Acc: 0.613710 Loss: 0.007971 | Val Acc: 0.580106 loss: 0.009015[008/030] 10.60 sec(s) Train Acc: 0.636421 Loss: 0.007589 | Val Acc: 0.591808 loss: 0.009277[009/030] 10.61 sec(s) Train Acc: 0.647950 Loss: 0.007321 | Val Acc: 0.580663 loss: 0.009388[010/030] 10.64 sec(s) Train Acc: 0.664635 Loss: 0.007020 | Val Acc: 0.589301 loss: 0.008910[011/030] 10.93 sec(s) Train Acc: 0.680449 Loss: 0.006731 | Val Acc: 0.600446 loss: 0.009198[012/030] 10.48 sec(s) Train Acc: 0.695531 Loss: 0.006364 | Val Acc: 0.610198 loss: 0.009257[013/030] 10.46 sec(s) Train Acc: 0.711972 Loss: 0.006073 | Val Acc: 0.610198 loss: 0.008954[014/030] 11.79 sec(s) Train Acc: 0.726636 Loss: 0.005780 | Val Acc: 0.609641 loss: 0.008695[015/030] 10.62 sec(s) Train Acc: 0.741579 Loss: 0.005495 | Val Acc: 0.604068 loss: 0.009682[016/030] 10.55 sec(s) Train Acc: 0.760633 Loss: 0.005133 | Val Acc: 0.596266 loss: 0.009784[017/030] 10.53 sec(s) Train Acc: 0.771256 Loss: 0.004891 | Val Acc: 0.613820 loss: 0.009530[018/030] 10.51 sec(s) Train Acc: 0.785956 Loss: 0.004585 | Val Acc: 0.609083 loss: 0.009737[019/030] 10.49 sec(s) Train Acc: 0.798460 Loss: 0.004316 | Val Acc: 0.607412 loss: 0.010229[020/030] 10.49 sec(s) Train Acc: 0.812150 Loss: 0.003993 | Val Acc: 0.605461 loss: 0.010734[021/030] 10.47 sec(s) Train Acc: 0.822913 Loss: 0.003782 | Val Acc: 0.576484 loss: 0.011127[022/030] 10.53 sec(s) Train Acc: 0.836184 Loss: 0.003529 | Val Acc: 0.594595 loss: 0.012074[023/030] 10.55 sec(s) Train Acc: 0.846459 Loss: 0.003334 | Val Acc: 0.604904 loss: 0.012387[024/030] 10.48 sec(s) Train Acc: 0.857780 Loss: 0.003108 | Val Acc: 0.612427 loss: 0.011957[025/030] 10.61 sec(s) Train Acc: 0.866070 Loss: 0.002917 | Val Acc: 0.598495 loss: 0.012693[026/030] 10.63 sec(s) Train Acc: 0.869100 Loss: 0.002839 | Val Acc: 0.599053 loss: 0.013177[027/030] 10.60 sec(s) Train Acc: 0.883800 Loss: 0.002563 | Val Acc: 0.608247 loss: 0.012588[028/030] 10.58 sec(s) Train Acc: 0.889094 Loss: 0.002421 | Val Acc: 0.609083 loss: 0.012604[029/030] 10.57 sec(s) Train Acc: 0.889338 Loss: 0.002420 | Val Acc: 0.606297 loss: 0.014425[030/030] 10.58 sec(s) Train Acc: 0.898743 Loss: 0.002211 | Val Acc: 0.600446 loss: 0.013294

六、调优模型

1) 在FC层增加dropout

1 self.fc =nn.Sequential( 2 nn.Linear(2304, 512), 3 nn.Dropout(0.3), 4 nn.ReLU(), 5 nn.Linear(512, 256), 6 nn.Dropout(0.3), 7 nn.Linear(256, 7) 8 )

效果没有显著提升,val acc大概还是0.6左右

[001/030] 11.63 sec(s) Train Acc: 0.307534 Loss: 0.013370 | Val Acc: 0.412928 loss: 0.012034[002/030] 10.04 sec(s) Train Acc: 0.445191 Loss: 0.011217 | Val Acc: 0.495403 loss: 0.010660[003/030] 11.71 sec(s) Train Acc: 0.490961 Loss: 0.010363 | Val Acc: 0.514349 loss: 0.010133[004/030] 10.32 sec(s) Train Acc: 0.509805 Loss: 0.009945 | Val Acc: 0.544999 loss: 0.009528[005/030] 11.72 sec(s) Train Acc: 0.536452 Loss: 0.009538 | Val Acc: 0.553636 loss: 0.009448[006/030] 10.73 sec(s) Train Acc: 0.552823 Loss: 0.009249 | Val Acc: 0.553079 loss: 0.009476[007/030] 11.18 sec(s) Train Acc: 0.563134 Loss: 0.009051 | Val Acc: 0.551407 loss: 0.009214[008/030] 10.37 sec(s) Train Acc: 0.575882 Loss: 0.008729 | Val Acc: 0.559487 loss: 0.009178[009/030] 10.68 sec(s) Train Acc: 0.592149 Loss: 0.008511 | Val Acc: 0.585678 loss: 0.008921[010/030] 10.49 sec(s) Train Acc: 0.600683 Loss: 0.008264 | Val Acc: 0.570354 loss: 0.009281[011/030] 10.57 sec(s) Train Acc: 0.611725 Loss: 0.008074 | Val Acc: 0.586236 loss: 0.008738[012/030] 10.60 sec(s) Train Acc: 0.625727 Loss: 0.007824 | Val Acc: 0.597660 loss: 0.008503[013/030] 10.58 sec(s) Train Acc: 0.635306 Loss: 0.007631 | Val Acc: 0.583171 loss: 0.008948[014/030] 10.60 sec(s) Train Acc: 0.644606 Loss: 0.007423 | Val Acc: 0.583728 loss: 0.008670[015/030] 11.75 sec(s) Train Acc: 0.655300 Loss: 0.007204 | Val Acc: 0.583449 loss: 0.009537[016/030] 11.72 sec(s) Train Acc: 0.668536 Loss: 0.006962 | Val Acc: 0.613820 loss: 0.008576[017/030] 11.78 sec(s) Train Acc: 0.677383 Loss: 0.006736 | Val Acc: 0.582056 loss: 0.010016[018/030] 10.39 sec(s) Train Acc: 0.686753 Loss: 0.006568 | Val Acc: 0.604068 loss: 0.009147[019/030] 10.35 sec(s) Train Acc: 0.696402 Loss: 0.006376 | Val Acc: 0.586514 loss: 0.009409[020/030] 10.37 sec(s) Train Acc: 0.701870 Loss: 0.006202 | Val Acc: 0.602675 loss: 0.009220[021/030] 10.39 sec(s) Train Acc: 0.714758 Loss: 0.005984 | Val Acc: 0.595152 loss: 0.009192[022/030] 10.44 sec(s) Train Acc: 0.722178 Loss: 0.005780 | Val Acc: 0.601282 loss: 0.009065[023/030] 10.65 sec(s) Train Acc: 0.735623 Loss: 0.005579 | Val Acc: 0.621064 loss: 0.009224[024/030] 10.46 sec(s) Train Acc: 0.743809 Loss: 0.005383 | Val Acc: 0.618278 loss: 0.010290[025/030] 10.44 sec(s) Train Acc: 0.752447 Loss: 0.005239 | Val Acc: 0.616606 loss: 0.010834[026/030] 10.32 sec(s) Train Acc: 0.758403 Loss: 0.005108 | Val Acc: 0.589858 loss: 0.009424[027/030] 10.32 sec(s) Train Acc: 0.766728 Loss: 0.004936 | Val Acc: 0.608526 loss: 0.010024[028/030] 10.33 sec(s) Train Acc: 0.771814 Loss: 0.004792 | Val Acc: 0.609083 loss: 0.010309[029/030] 10.29 sec(s) Train Acc: 0.780487 Loss: 0.004629 | Val Acc: 0.600167 loss: 0.011290[030/030] 10.26 sec(s) Train Acc: 0.794106 Loss: 0.004395 | Val Acc: 0.606854 loss: 0.012611

2)在第一层的maxpool中stride设成1,并周围补一圈0 --> 不缩小图像

因为48*48这个图还是比较马赛克化的

这样操作后,模型从最后一层conv后输出3*3变成了6*6,模型具体结构如下:

name class_name input_shape output_shape nb_params 1 cnn.0 Conv2d (-1, 1, 48, 48) (-1, 64, 49, 49) tensor(320) 2 cnn.1 BatchNorm2d (-1, 64, 49, 49) (-1, 64, 49, 49) tensor(128) 3 cnn.2 ReLU (-1, 64, 49, 49) (-1, 64, 49, 49) 0 4 cnn.3 MaxPool2d (-1, 64, 49, 49) (-1, 64, 50, 50) 0 5 cnn.4 Conv2d (-1, 64, 50, 50) (-1, 128, 51, 51) tensor(32896) 6 cnn.5 BatchNorm2d (-1, 128, 51, 51) (-1, 128, 51, 51) tensor(256) 7 cnn.6 ReLU (-1, 128, 51, 51) (-1, 128, 51, 51) 0 8 cnn.7 MaxPool2d (-1, 128, 51, 51) (-1, 128, 25, 25) 0 9 cnn.8 Conv2d (-1, 128, 25, 25) (-1, 256, 25, 25) tensor(295168) 10 cnn.9 BatchNorm2d (-1, 256, 25, 25) (-1, 256, 25, 25) tensor(512) 11 cnn.10 ReLU (-1, 256, 25, 25) (-1, 256, 25, 25) 0 12 cnn.11 MaxPool2d (-1, 256, 25, 25) (-1, 256, 12, 12) 0 13 cnn.12 Conv2d (-1, 256, 12, 12) (-1, 256, 12, 12) tensor(590080) 14 cnn.13 BatchNorm2d (-1, 256, 12, 12) (-1, 256, 12, 12) tensor(512) 15 cnn.14 ReLU (-1, 256, 12, 12) (-1, 256, 12, 12) 0 16 cnn.15 MaxPool2d (-1, 256, 12, 12) (-1, 256, 6, 6) 0 17 fc.0 Linear (-1, 9216) (-1, 512) tensor(4719104) 18 fc.1 Dropout (-1, 512) (-1, 512) 0 19 fc.2 ReLU (-1, 512) (-1, 512) 0 20 fc.3 Linear (-1, 512) (-1, 256) tensor(131328) 21 fc.4 Dropout (-1, 256) (-1, 256) 0 22 fc.5 Linear (-1, 256) (-1, 7) tensor(1799)

但训练结果没有提升,反而看起来略有点下降了。可能因为这样引入了更多噪音

[001/030] 15.70 sec(s) Train Acc: 0.254276 Loss: 0.014978 | Val Acc: 0.329061 loss: 0.013547[002/030] 14.21 sec(s) Train Acc: 0.359678 Loss: 0.012589 | Val Acc: 0.418222 loss: 0.011816[003/030] 14.17 sec(s) Train Acc: 0.417883 Loss: 0.011663 | Val Acc: 0.472276 loss: 0.011007[004/030] 14.30 sec(s) Train Acc: 0.451844 Loss: 0.011097 | Val Acc: 0.479521 loss: 0.010857[005/030] 14.30 sec(s) Train Acc: 0.461388 Loss: 0.010875 | Val Acc: 0.509334 loss: 0.010366[006/030] 14.32 sec(s) Train Acc: 0.484726 Loss: 0.010492 | Val Acc: 0.522708 loss: 0.010089[007/030] 14.32 sec(s) Train Acc: 0.500644 Loss: 0.010178 | Val Acc: 0.533296 loss: 0.009900[008/030] 14.31 sec(s) Train Acc: 0.509979 Loss: 0.010009 | Val Acc: 0.547785 loss: 0.009626[009/030] 14.32 sec(s) Train Acc: 0.522937 Loss: 0.009812 | Val Acc: 0.543327 loss: 0.009497[010/030] 14.32 sec(s) Train Acc: 0.529416 Loss: 0.009635 | Val Acc: 0.565060 loss: 0.009770[011/030] 14.31 sec(s) Train Acc: 0.537810 Loss: 0.009511 | Val Acc: 0.561995 loss: 0.009458[012/030] 14.33 sec(s) Train Acc: 0.544115 Loss: 0.009311 | Val Acc: 0.538033 loss: 0.009728[013/030] 14.32 sec(s) Train Acc: 0.552928 Loss: 0.009205 | Val Acc: 0.560323 loss: 0.009435[014/030] 14.32 sec(s) Train Acc: 0.561218 Loss: 0.009048 | Val Acc: 0.573976 loss: 0.009330[015/030] 14.32 sec(s) Train Acc: 0.568254 Loss: 0.008903 | Val Acc: 0.573976 loss: 0.009089[016/030] 14.35 sec(s) Train Acc: 0.575743 Loss: 0.008777 | Val Acc: 0.571468 loss: 0.009189[017/030] 14.34 sec(s) Train Acc: 0.581838 Loss: 0.008667 | Val Acc: 0.567846 loss: 0.009613[018/030] 14.32 sec(s) Train Acc: 0.589954 Loss: 0.008496 | Val Acc: 0.578991 loss: 0.009191[019/030] 14.32 sec(s) Train Acc: 0.593124 Loss: 0.008375 | Val Acc: 0.590415 loss: 0.009168[020/030] 14.32 sec(s) Train Acc: 0.603191 Loss: 0.008238 | Val Acc: 0.598217 loss: 0.009239[021/030] 14.32 sec(s) Train Acc: 0.609704 Loss: 0.008045 | Val Acc: 0.598774 loss: 0.008932[022/030] 14.32 sec(s) Train Acc: 0.613327 Loss: 0.008028 | Val Acc: 0.598774 loss: 0.008821[023/030] 14.32 sec(s) Train Acc: 0.623881 Loss: 0.007821 | Val Acc: 0.598495 loss: 0.008917[024/030] 14.32 sec(s) Train Acc: 0.631056 Loss: 0.007649 | Val Acc: 0.599053 loss: 0.008865[025/030] 14.34 sec(s) Train Acc: 0.637082 Loss: 0.007531 | Val Acc: 0.597660 loss: 0.008953[026/030] 14.32 sec(s) Train Acc: 0.639834 Loss: 0.007439 | Val Acc: 0.606576 loss: 0.009263[027/030] 14.31 sec(s) Train Acc: 0.652200 Loss: 0.007253 | Val Acc: 0.597381 loss: 0.008817[028/030] 14.30 sec(s) Train Acc: 0.657076 Loss: 0.007143 | Val Acc: 0.586236 loss: 0.009791[029/030] 14.31 sec(s) Train Acc: 0.662301 Loss: 0.007033 | Val Acc: 0.612984 loss: 0.009230[030/030] 14.30 sec(s) Train Acc: 0.672577 Loss: 0.006849 | Val Acc: 0.598495 loss: 0.009119

七、训练+验证一起重新训练一遍最优模型

这里还是用最开始的model

合并train,test

1 train_val_x = np.concatenate((train_x, val_x), axis=0) 2 train_val_y = np.concatenate((train_y, val_y), axis=0) 3 train_val_set =MicroExpreDataset(train_val_x, train_val_y, train_transform) 4 train_val_loader = DataLoader(train_val_set, batch_size=batch_size, shuffle=True)

用best_model进行训练

1 model_best =Classifier().cuda() 2 loss =nn.CrossEntropyLoss() 3 optimizer = torch.optim.Adam(model_best.parameters(), lr=0.001) 4 num_epoch = 30 5 6 for epoch inrange(num_epoch): 7 epoch_start_time =time.time() 8 train_acc = 0.0 9 train_loss = 0.0 10 11 model_best.train() 12 for i, data inenumerate(train_val_loader): 13 optimizer.zero_grad() 14 train_pred =model_best(data[0].cuda()) 15 batch_loss = loss(train_pred, data[1].cuda()) 16 batch_loss.backward() 17 optimizer.step() 18 19 train_acc += np.sum(np.argmax(train_pred.cpu().data.numpy(), axis=1) == data[1].numpy()) 20 train_loss+=batch_loss.item() 21 22 #print out the result 23 print('[%03d/%03d] %2.2f sec(s) Train Acc: %3.6f Loss: %3.6f' %24 (epoch + 1, num_epoch, time.time()-epoch_start_time, 25 train_acc/train_val_set.__len__(), train_loss/train_val_set.__len__()))

训练结果大概89%左右,但应该是有一点过拟合了

1 [001/030] 11.02 sec(s) Train Acc: 0.349867 Loss: 0.012808 2 [002/030] 10.87 sec(s) Train Acc: 0.494024 Loss: 0.010299 3 [003/030] 10.91 sec(s) Train Acc: 0.540529 Loss: 0.009427 4 [004/030] 10.97 sec(s) Train Acc: 0.573101 Loss: 0.008838 5 [005/030] 10.97 sec(s) Train Acc: 0.594774 Loss: 0.008422 6 [006/030] 10.97 sec(s) Train Acc: 0.610224 Loss: 0.008127 7 [007/030] 11.07 sec(s) Train Acc: 0.625735 Loss: 0.007769 8 [008/030] 11.06 sec(s) Train Acc: 0.643476 Loss: 0.007467 9 [009/030] 10.97 sec(s) Train Acc: 0.656604 Loss: 0.007177 10 [010/030] 10.99 sec(s) Train Acc: 0.675367 Loss: 0.006820 11 [011/030] 10.97 sec(s) Train Acc: 0.688928 Loss: 0.006606 12 [012/030] 10.96 sec(s) Train Acc: 0.708279 Loss: 0.006197 13 [013/030] 10.91 sec(s) Train Acc: 0.719549 Loss: 0.005955 14 [014/030] 10.98 sec(s) Train Acc: 0.726144 Loss: 0.005742 15 [015/030] 10.97 sec(s) Train Acc: 0.748746 Loss: 0.005355 16 [016/030] 10.96 sec(s) Train Acc: 0.768654 Loss: 0.004975 17 [017/030] 10.97 sec(s) Train Acc: 0.775714 Loss: 0.004779 18 [018/030] 10.99 sec(s) Train Acc: 0.795281 Loss: 0.004470 19 [019/030] 10.97 sec(s) Train Acc: 0.804694 Loss: 0.004222 20 [020/030] 10.98 sec(s) Train Acc: 0.818286 Loss: 0.003909 21 [021/030] 10.98 sec(s) Train Acc: 0.828720 Loss: 0.003722 22 [022/030] 10.97 sec(s) Train Acc: 0.837204 Loss: 0.003552 23 [023/030] 10.97 sec(s) Train Acc: 0.851167 Loss: 0.003269 24 [024/030] 10.98 sec(s) Train Acc: 0.857360 Loss: 0.003158 25 [025/030] 10.96 sec(s) Train Acc: 0.863057 Loss: 0.002980 26 [026/030] 10.99 sec(s) Train Acc: 0.874915 Loss: 0.002788 27 [027/030] 10.98 sec(s) Train Acc: 0.878166 Loss: 0.002646 28 [028/030] 10.98 sec(s) Train Acc: 0.886835 Loss: 0.002485 29 [029/030] 10.98 sec(s) Train Acc: 0.893771 Loss: 0.002362 30 [030/030] 10.98 sec(s) Train Acc: 0.896278 Loss: 0.002323

保存模型

torch.save(model_best, 'model_best.pkl')

注:

1)这里直接用全保存下来的model,读取再预测时会有一些问题。根据官方建议只保存训练参数,所以后来又存一下只有参数的模型供后面做demo用

torch.save(model_best.state_dict(),'model_best_para.pkl')

2)不论是保存的整个模型,还是只保存参数,最后再读取模型/参数时,都仍需要提供原模型结构的代码

八、测试

用测试集再算一遍看下acc到达多少

读取模型

#load model test_model = torch.load('model_best.pkl')

1 test_set =MicroExpreDataset(test_x, test_y, test_transform) 2 test_loader = DataLoader(test_set, batch_size=batch_size, shuffle=False) 3 4 test_model.eval() 5 prediction =[] 6 with torch.no_grad(): 7 for i, data inenumerate(test_loader): 8 test_pred =test_model(data[0].cuda()) 9 test_label = np.argmax(test_pred.cpu().data.numpy(), axis=1) 10 for y intest_label: 11 prediction.append(y) 12 13 test_acc = np.sum(prediction == test_y) / test_set.__len__() 14 test_acc 15 16 #test_acc= 0.62245

最后test acc = 0.622

合并了训练集和验证集后效果略提升了一些。

分析:

(1)训练数据集本身清晰度不够,可能细微表情变换较难识别出来。另外某些表情有一定相似性,例如生气、厌恶、恐惧等。

(2)微表情中某些类别样本不均衡,进一步导致难以识别。例如快乐表情准确率更高,而恐惧就很低。

补充:在网上看了另外一个博主的工作,他采用的数据增强的方法如下:

4.2 数据增强

为了防止网络过快地过拟合,可以人为的做一些图像变换,例如翻转,旋转,切割等。上述操作称为数据增强。数据操作还有另一大好处是扩大数据库的数据量,使得训练的网络鲁棒性更强。在本实验中,在训练阶段,我们采用随机切割44*44的图像,并将图像进行随机镜像,然后送入训练。在测试阶段,本文采用一种集成的方法来减少异常值。我们将图片在左上角,左下角,右上角,右下角,中心进行切割和并做镜像操作,这样的操作使得数据库扩大了10倍,再将这10张图片送入模型。然后将得到的概率取平均,最大的输出分类即为对应表情,这种方法有效地降低了分类错误。

其中图像变换我们也做了。值得参考的是该作者测试时还采用了集成方法,即一张图片操作成10张,让模型判断并求平均。

九、调用摄像头,在线demo

步骤:

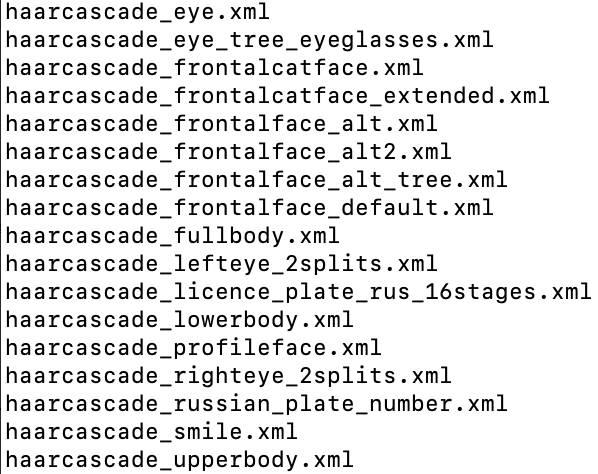

1)调用opencv自带的人脸识别器

一般使用:

在这里我们用alt2来实现。

2)调用系统摄像头拍摄出人脸图片

3)对人脸图片进行预处理

4)将处理完的图片传入模型

5)将模型计算出来的结果反馈至运行窗口界面

需注意:

1)模型中并没有加入softmax层,最后输出的是交叉熵损失。如果要在每帧图片里显示成每个类别的概率,需给输出结果加一个softmax:p_result = F.softmax(result, dim=1)

2)在调用cascade_classifier.detectMultiScale这个函数时,建议设为1.1,否则可能导致人脸识别不够敏感

参考:

https://www.cnblogs.com/XDU-Lakers/p/10587894.html

https://blog.csdn.net/mathlxj/article/details/87920084

https://github.com/tgpcai/Microexpression_recognition